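Reinforcement Learning: Intuition

Rather than telling the agent what to do, we specify a reward function that tells it when it is doing well.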

Return

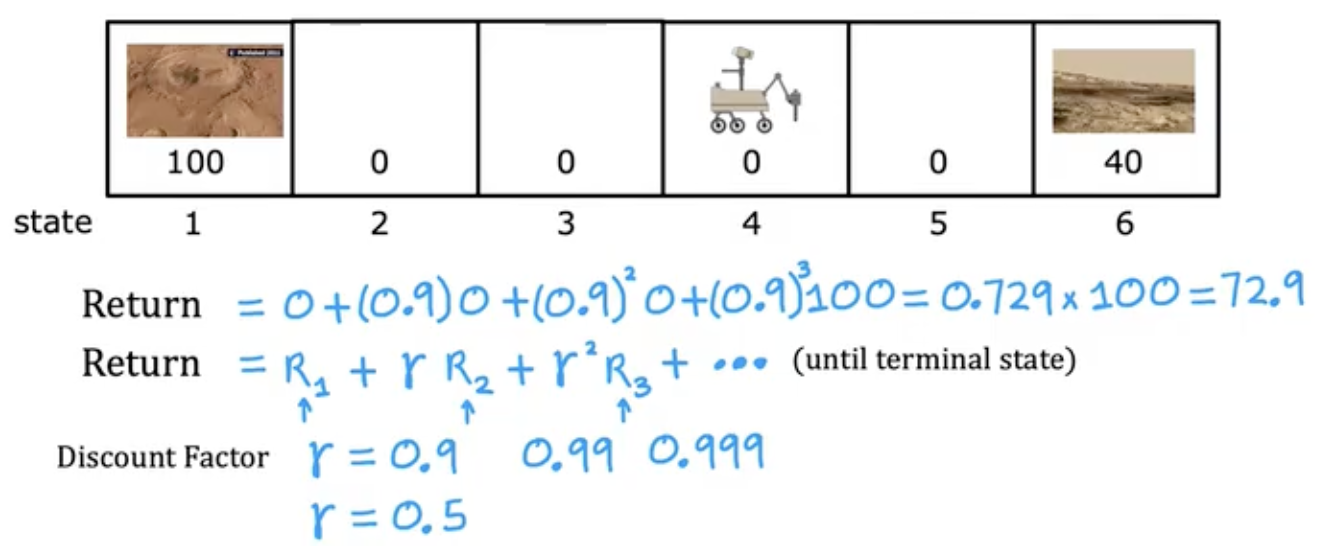

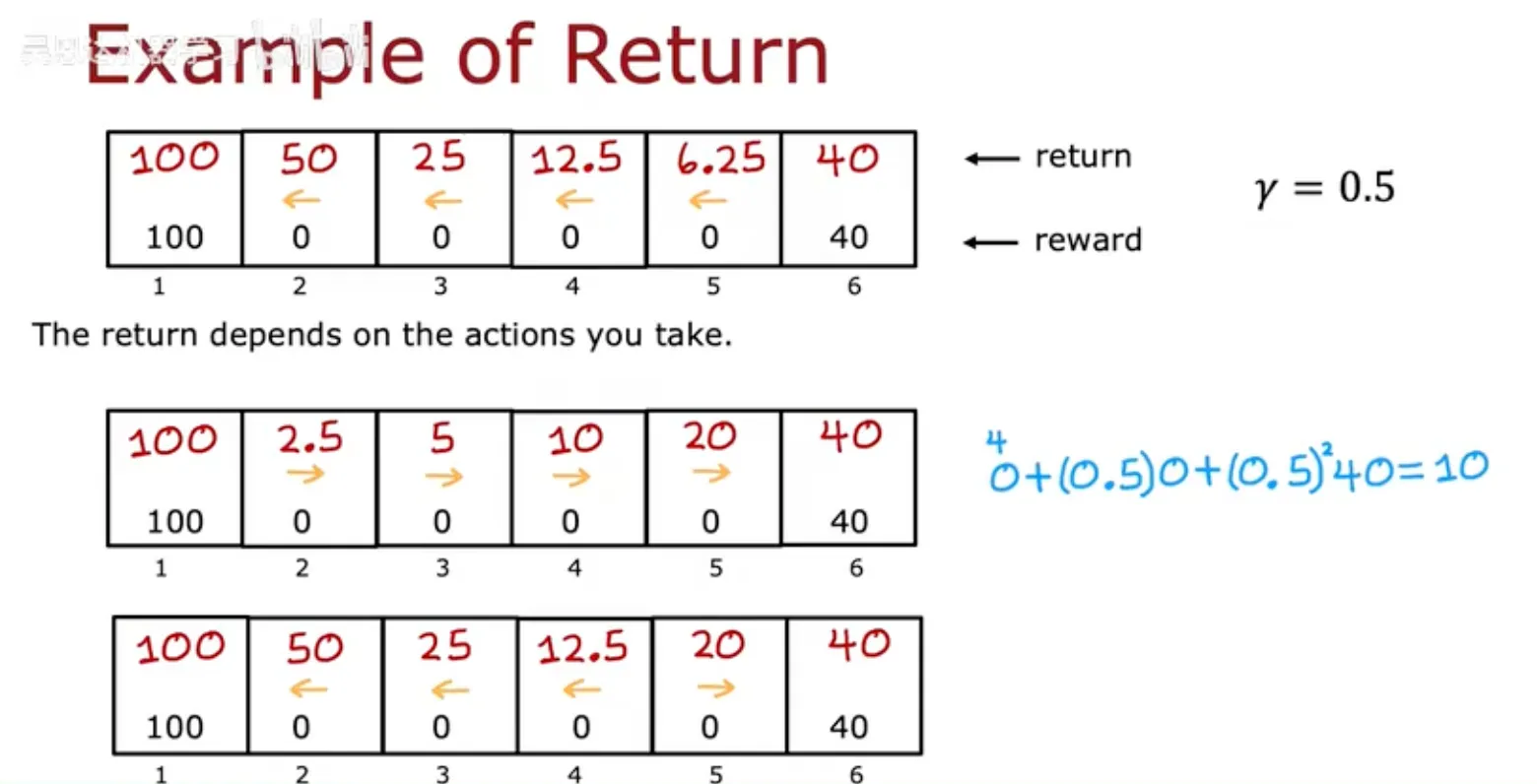

Adding a discount factor

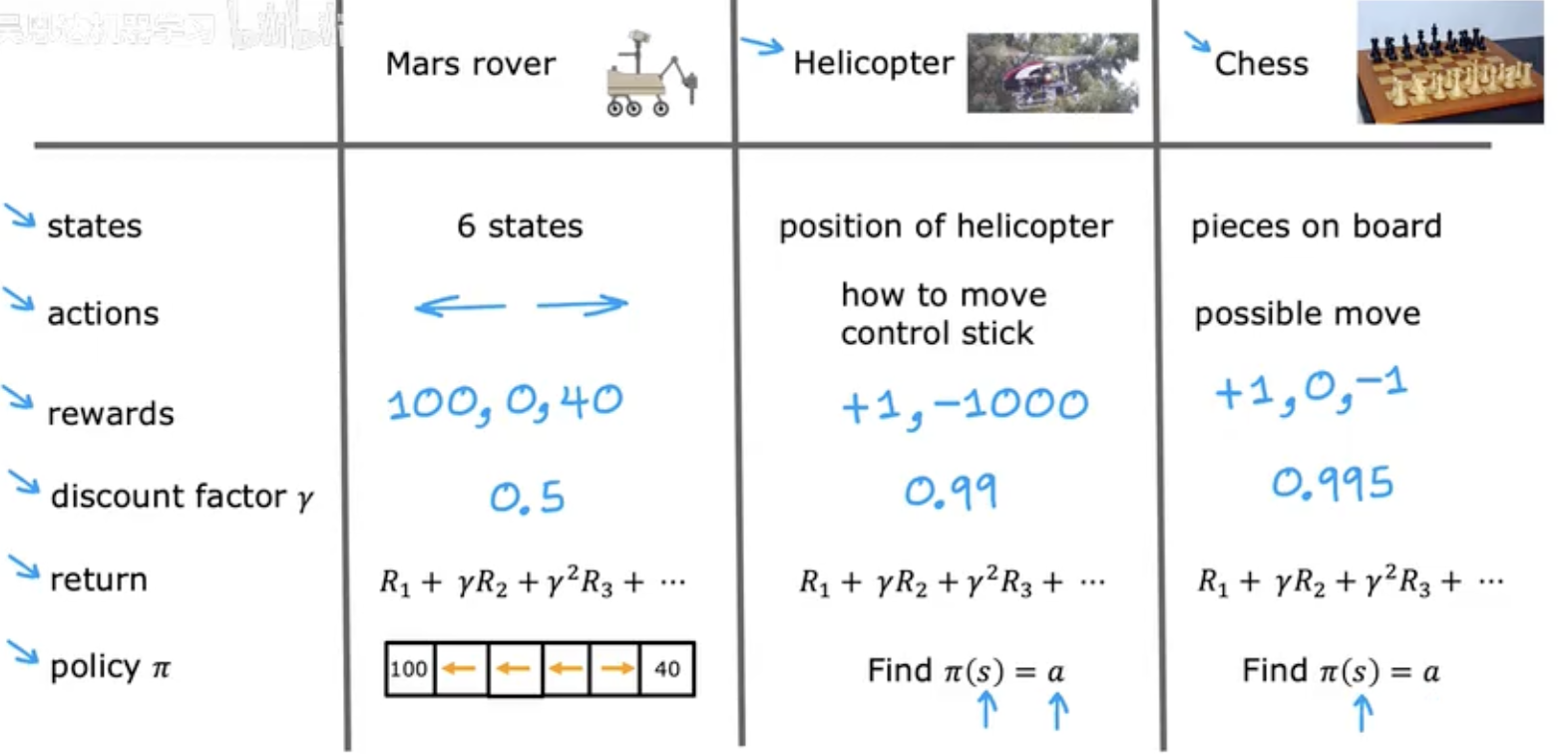

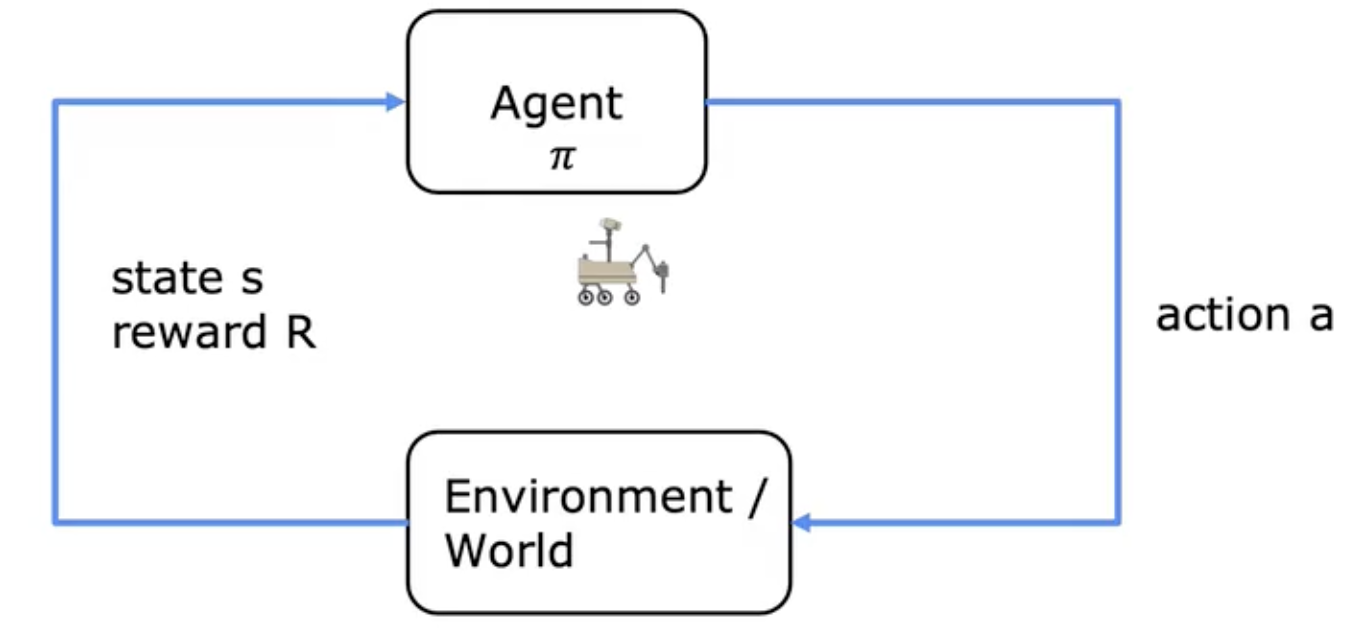
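As a small illustration of discounting, the sketch below computes the return of one reward sequence. The reward values and the discount factor of 0.5 are assumptions chosen for the example, not values taken from the figures above.

```python
def discounted_return(rewards, gamma):
    """Return R_1 + gamma * R_2 + gamma^2 * R_3 + ... for a reward sequence."""
    total = 0.0
    # Work backwards through the sequence: G_t = R_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical episode: three steps with no reward, then a reward of 100.
example_rewards = [0, 0, 0, 100]
example_return = discounted_return(example_rewards, gamma=0.5)
print(example_return)  # 0.5**3 * 100 = 12.5
```

A discount factor below 1 makes rewards that arrive later count for less, so the same reward reached in fewer steps gives a higher return.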

Formalizing reinforcement learning

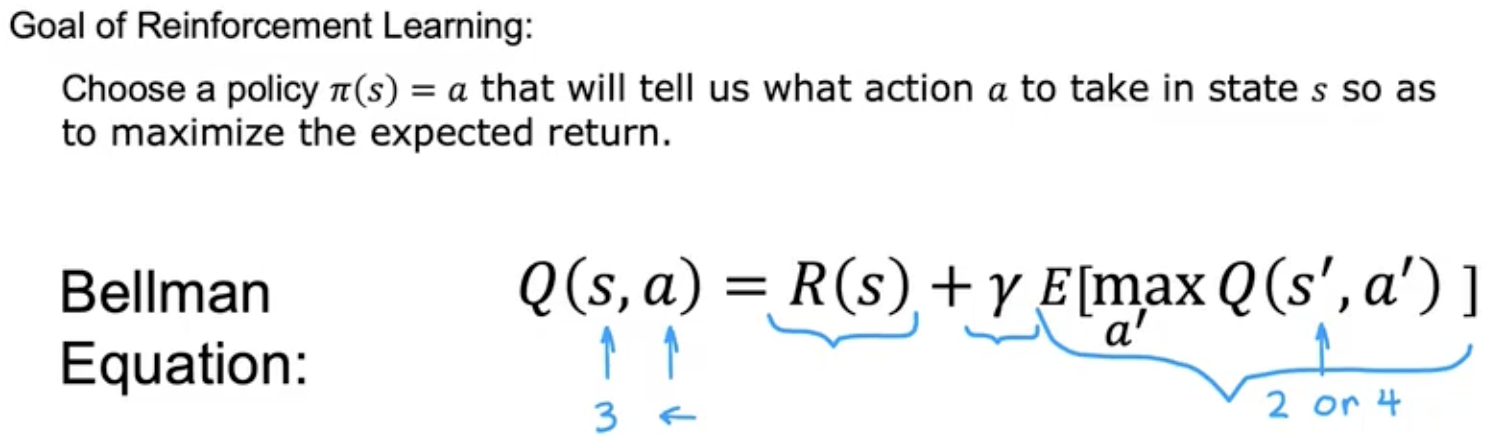

A policy is a function $\pi(s) = a$ that maps states to actions: it tells you which action $a$ to take in a given state $s$.

Goal: find a policy $\pi$ that tells you which action $a = \pi(s)$ to take in every state $s$ so as to maximize the return.

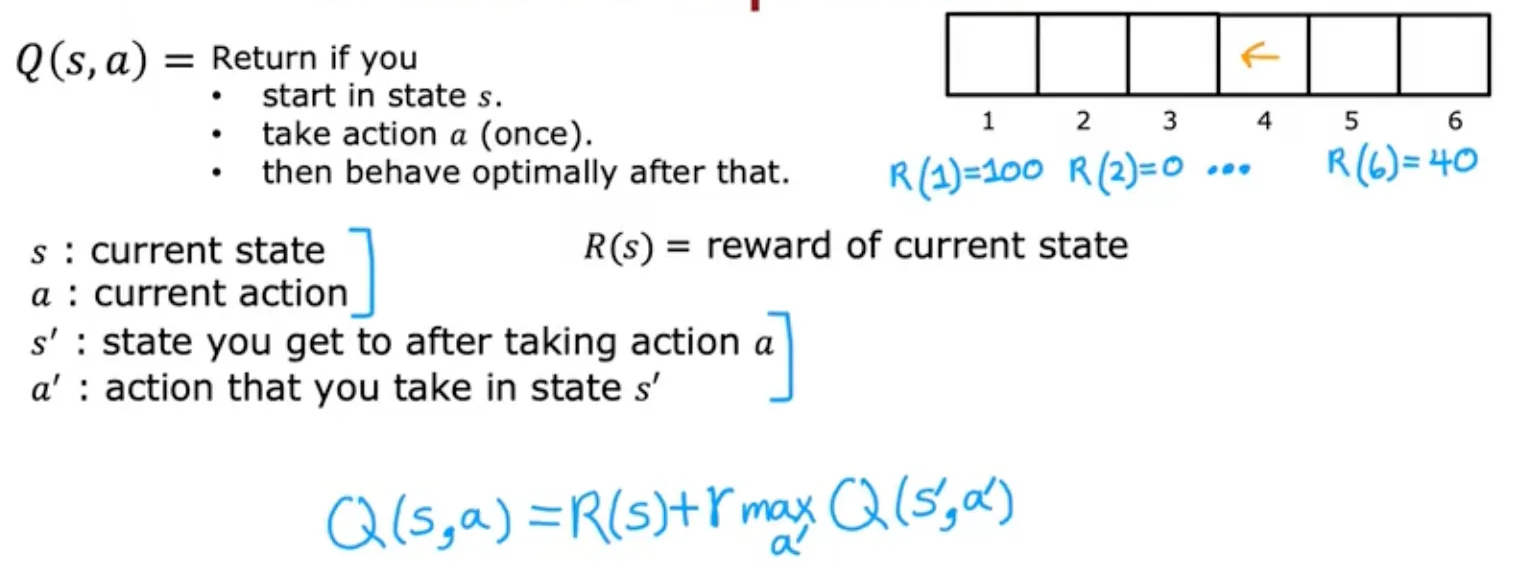

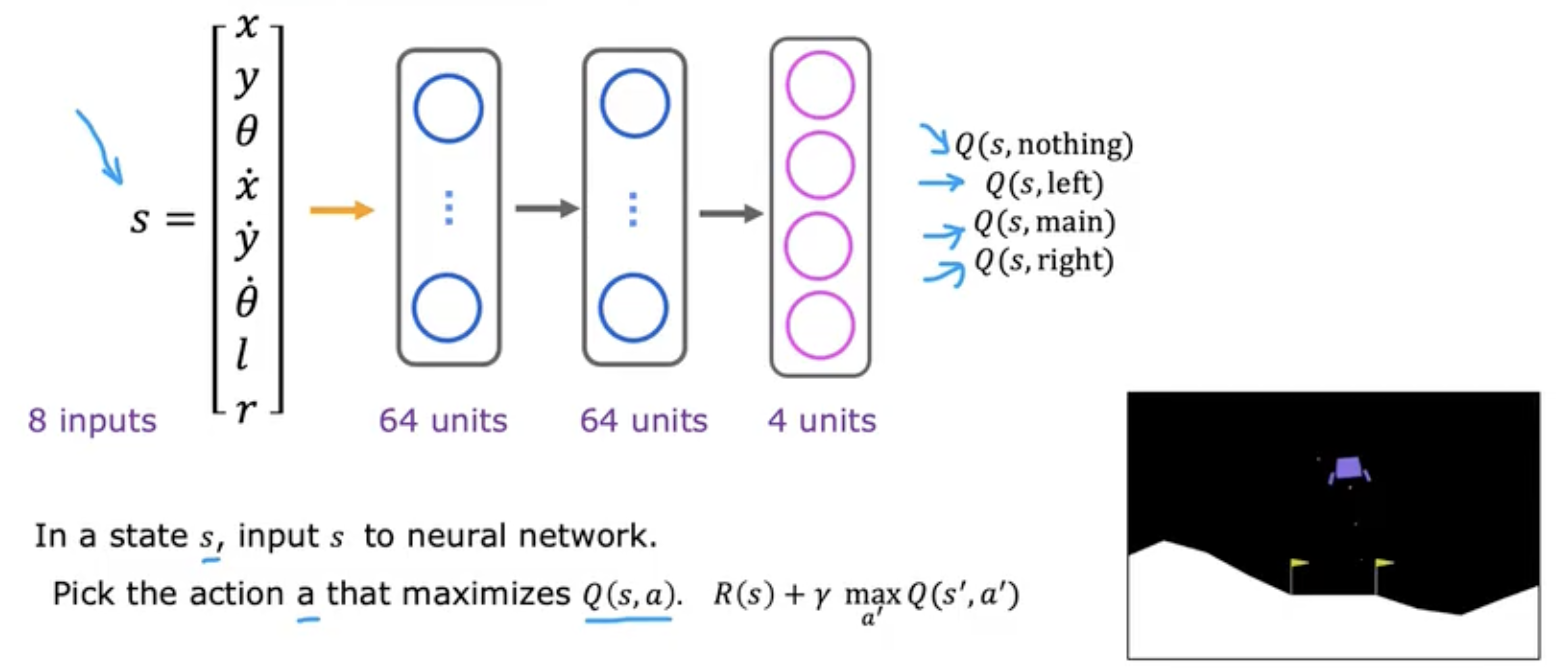

State-action value function (Q-function)

$Q(s, a)$ = the return if you:

- start in state s.

- take action a (once).

- then behave optimally after that.

The best possible return from state $s$ is $\max_a Q(s, a)$. The best possible action in state $s$ is the action $a$ that attains $\max_a Q(s, a)$.
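If the Q-values are already known, reading off the best return and the best action is a max and an argmax over actions. The sketch below uses a made-up Q-table (rows are states, columns are actions); the numbers and the helper names `best_return` and `best_action` are illustrative assumptions.

```python
import numpy as np

# Hypothetical Q-table: one row per state, one column per action
# (column 0 = "left", column 1 = "right").
Q = np.array([
    [50.0, 12.5],
    [25.0, 6.25],
    [12.5, 10.0],
    [6.25, 20.0],
])


def best_return(Q, s):
    """Best possible return from state s: the max over actions of Q(s, a)."""
    return float(Q[s].max())


def best_action(Q, s):
    """Best action in state s: the action a with the largest Q(s, a)."""
    return int(Q[s].argmax())


# The greedy policy pi(s) picks the best action in every state.
policy = [best_action(Q, s) for s in range(Q.shape[0])]
print(policy)  # [0, 0, 0, 1]
```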

Markov Decision Process (MDP)

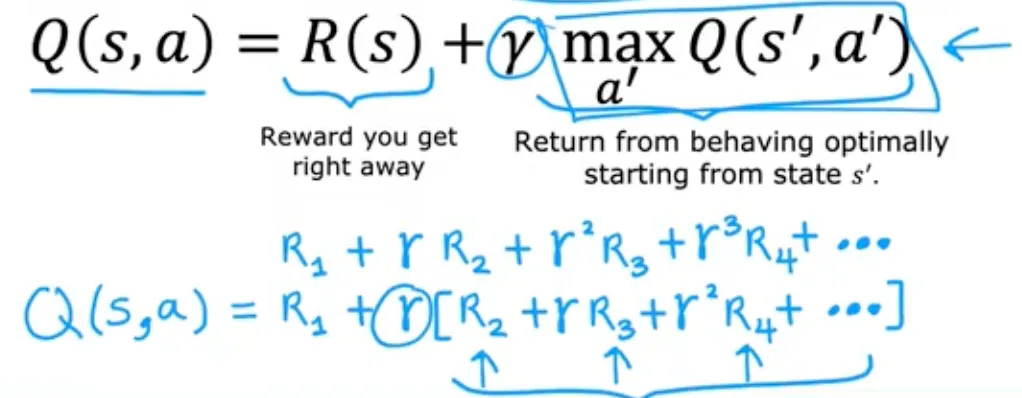

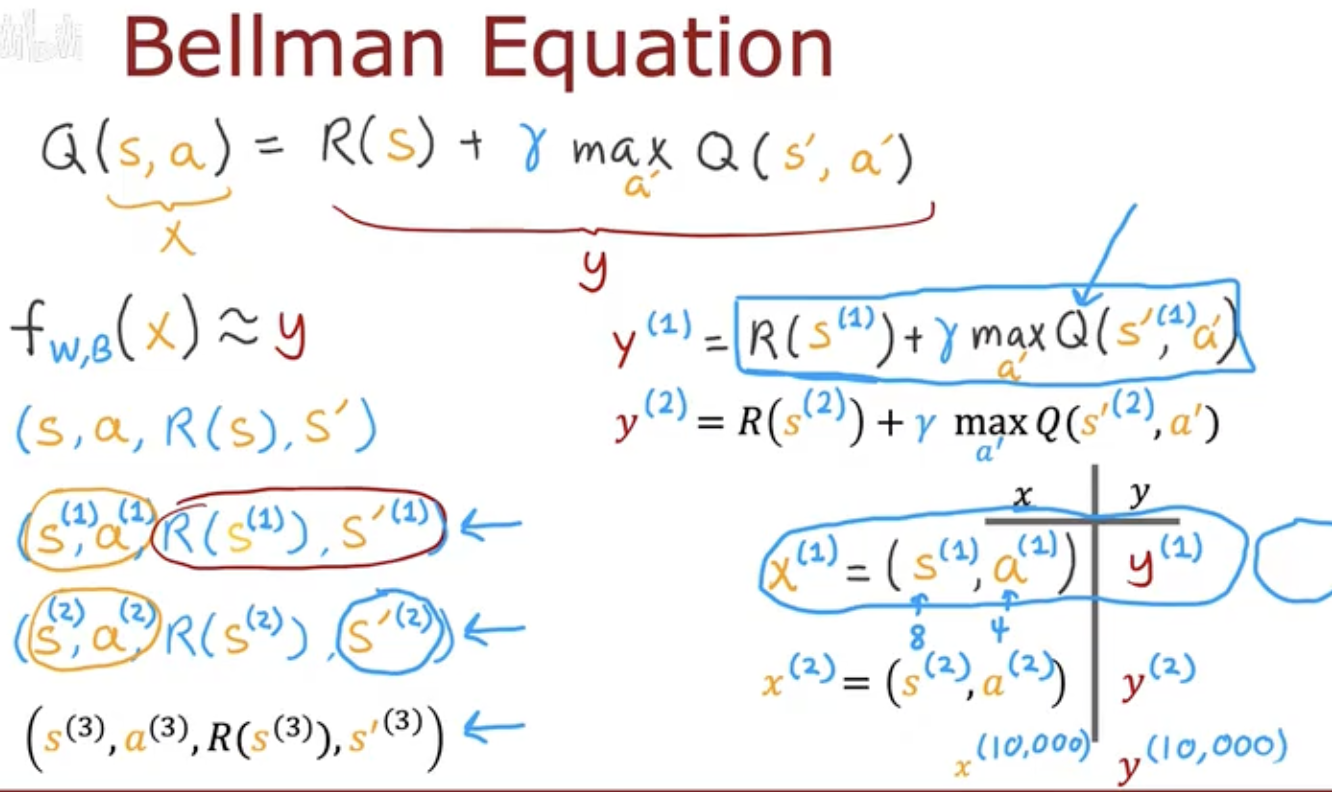

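Bellman equation

Goal: compute the state-action value function $Q(s, a)$.

The Bellman equation:

$$Q(s, a) = R(s) + \gamma \max_{a'} Q(s', a')$$

It has two parts: the reward received immediately, $R(s)$, plus the discounted return from behaving optimally starting from the next state $s'$.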
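One way to see the two parts at work is to apply the "immediate reward plus discounted best future value" rule repeatedly until the Q-values stop changing. The sketch below does this on a hypothetical six-state chain with two actions; the rewards, the terminal states, and the discount factor of 0.5 are assumptions made for the example.

```python
import numpy as np

# Hypothetical chain of 6 states; states 0 and 5 are terminal.
rewards = np.array([100.0, 0.0, 0.0, 0.0, 0.0, 40.0])  # R(s)
terminal = {0, 5}
gamma = 0.5
n_states, n_actions = len(rewards), 2  # action 0 = left, action 1 = right


def next_state(s, a):
    """Deterministic move one step left or right, clipped to the chain."""
    return max(s - 1, 0) if a == 0 else min(s + 1, n_states - 1)


Q = np.zeros((n_states, n_actions))
for _ in range(50):  # far more sweeps than this small chain needs
    new_Q = np.empty_like(Q)
    for s in range(n_states):
        for a in range(n_actions):
            if s in terminal:
                # At a terminal state only the immediate reward remains.
                new_Q[s, a] = rewards[s]
            else:
                # Immediate reward + discounted best value of the next state.
                new_Q[s, a] = rewards[s] + gamma * Q[next_state(s, a)].max()
    Q = new_Q

print(Q)
```

For this toy chain, state 1 ends up with values 50 (left) and 12.5 (right): going left reaches the reward of 100 after one discounted step.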

Stochastic environments

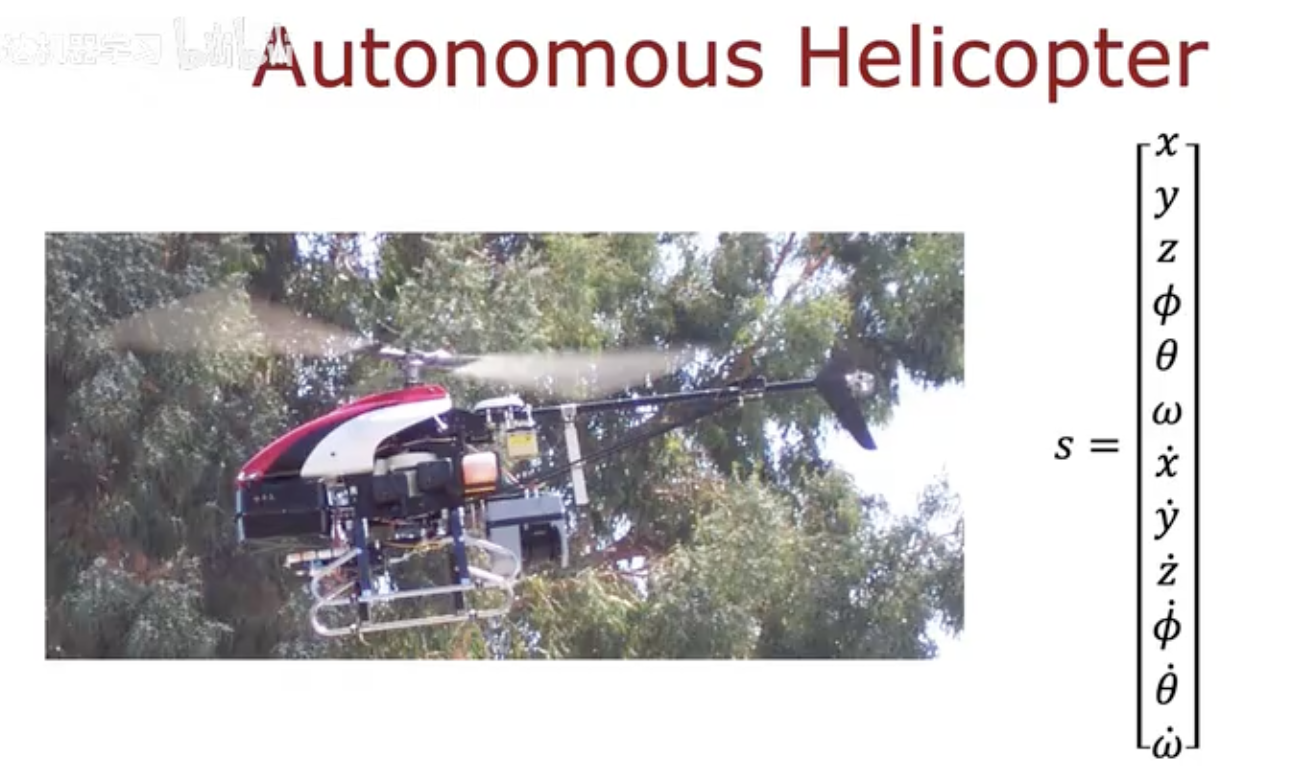
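In a stochastic environment the next state is random, so the future part of the Q-value becomes an expectation over possible next states. The sketch below assumes a hypothetical six-state chain in which the agent moves in the opposite direction with probability `misstep_prob`; the rewards, the discount factor, and the function name `q_values` are illustrative assumptions.

```python
import numpy as np


def q_values(misstep_prob, gamma=0.5, n_iters=100):
    """Iterate Q(s, a) = R(s) + gamma * E[max_a' Q(s', a')] on a toy chain."""
    rewards = np.array([100.0, 0.0, 0.0, 0.0, 0.0, 40.0])  # hypothetical R(s)
    terminal = {0, 5}
    n_states = len(rewards)
    Q = np.zeros((n_states, 2))  # action 0 = left, action 1 = right
    for _ in range(n_iters):
        new_Q = np.empty_like(Q)
        for s in range(n_states):
            left, right = max(s - 1, 0), min(s + 1, n_states - 1)
            # For each action: (intended next state, next state after a misstep).
            outcomes = [(left, right), (right, left)]
            for a, (intended, slipped) in enumerate(outcomes):
                if s in terminal:
                    new_Q[s, a] = rewards[s]
                    continue
                # Expected best future value over the two possible outcomes.
                expected_future = ((1 - misstep_prob) * Q[intended].max()
                                   + misstep_prob * Q[slipped].max())
                new_Q[s, a] = rewards[s] + gamma * expected_future
        Q = new_Q
    return Q


deterministic_Q = q_values(misstep_prob=0.0)
stochastic_Q = q_values(misstep_prob=0.1)
print(deterministic_Q[1, 0], stochastic_Q[1, 0])
```

With a nonzero misstep probability, the value of heading toward the large reward drops, because some of the time the agent ends up moving away from it.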

Continuous state spaces

Helicopter

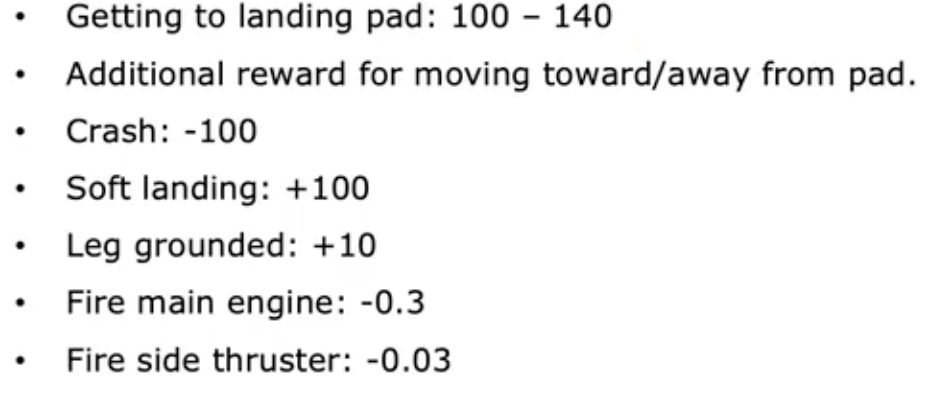

Lunar lander

$l$: whether the left leg is touching the ground

$r$: whether the right leg is touching the ground

The policy $\pi$ picks action $a = \pi(s)$ so as to maximize the return.

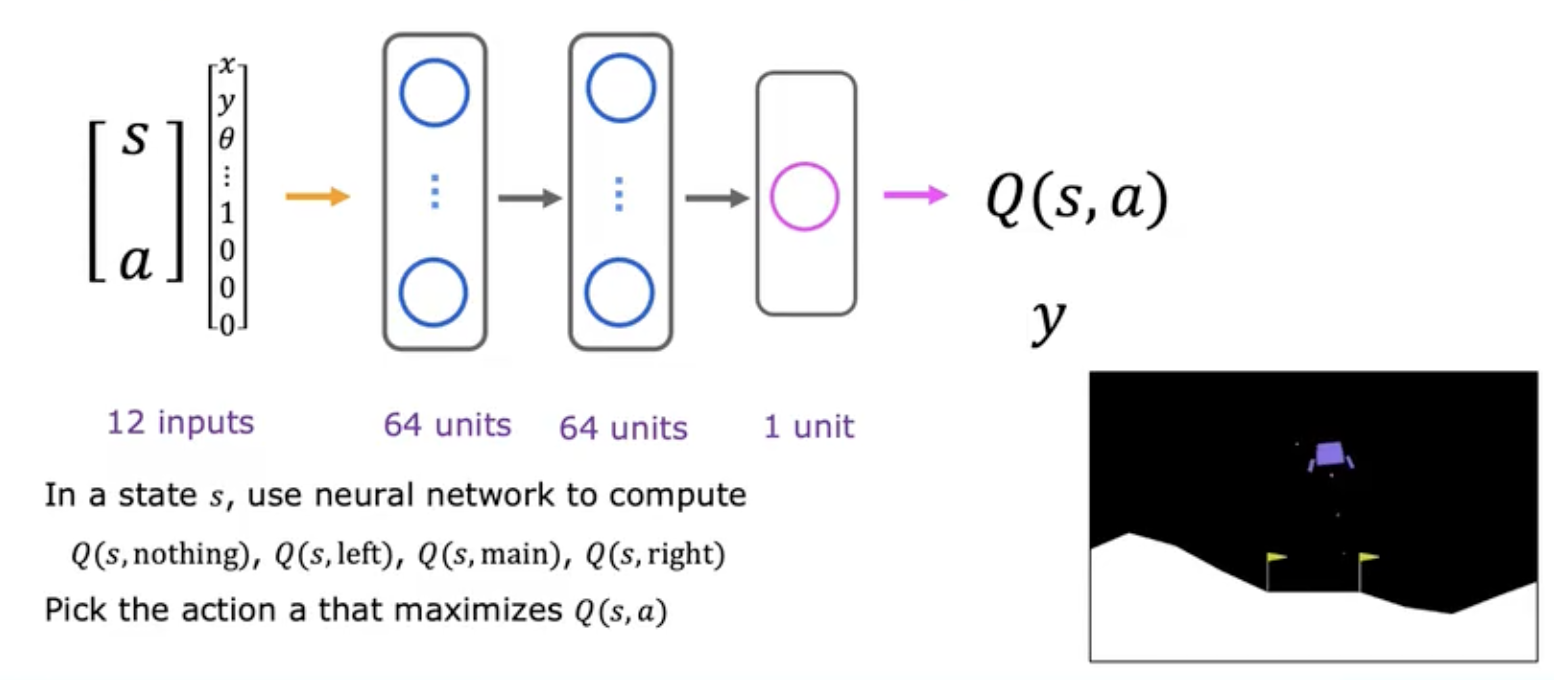

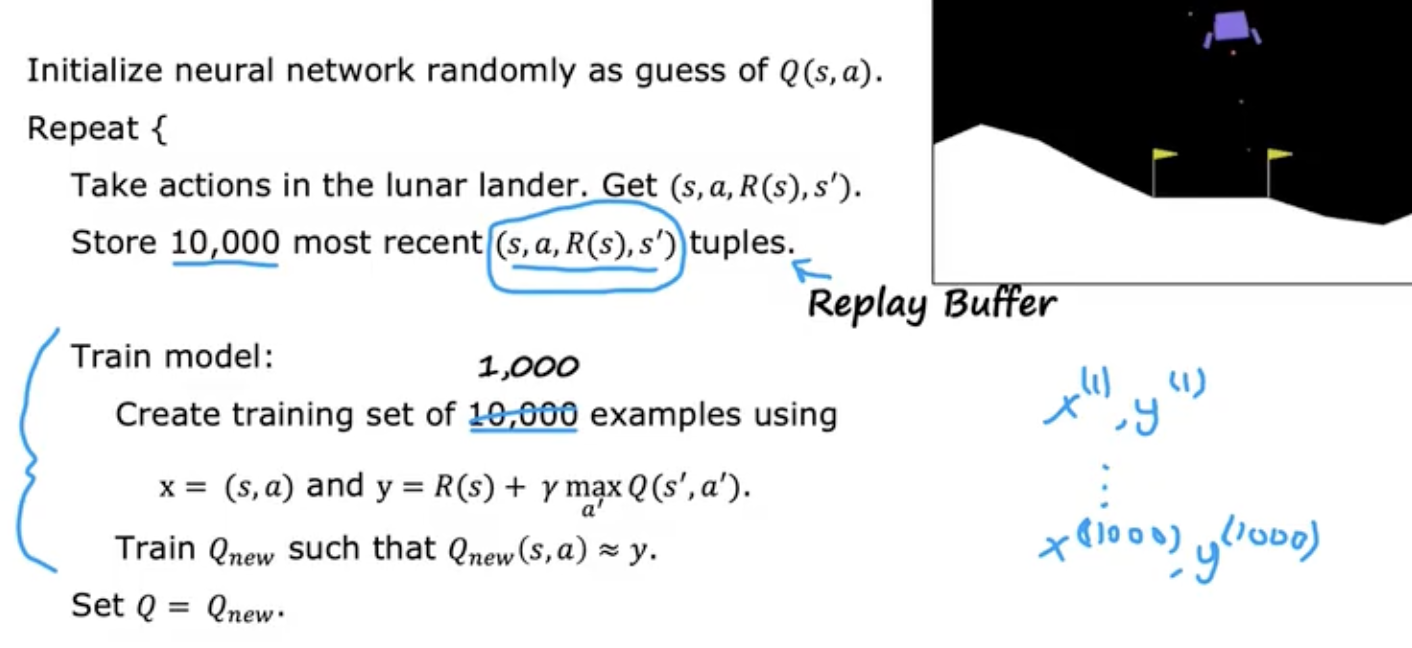

Training a reinforcement learning agent

Core idea of reinforcement learning:

Bellman equation

(The initial $Q$ can be a random guess.)

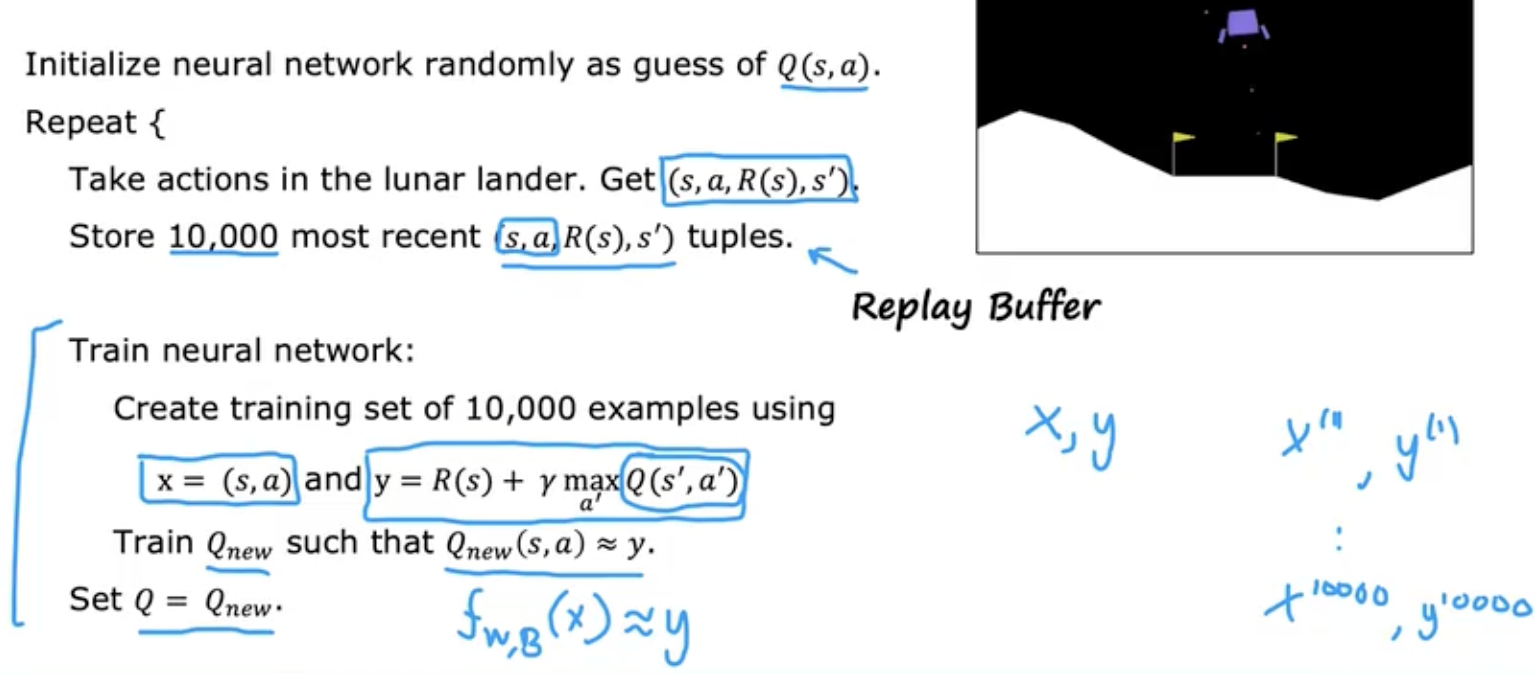
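A common way to use the Bellman equation for training is to turn each observed transition $(s, a, R, s')$ into a supervised target $y = R + \gamma \max_{a'} Q(s', a')$, computed with the current guess of $Q$. The sketch below assumes a random linear map as a stand-in for the neural network and fabricated transitions; the dimensions, the discount factor, and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
state_dim, n_actions, gamma = 8, 4, 0.995  # assumed sizes and discount

# Hypothetical stand-in for the Q-network: a randomly initialized linear map
# that returns one Q-value per action for each input state.
W = rng.normal(size=(state_dim, n_actions))


def q_network(states):
    return states @ W


# A fabricated batch of transitions (s, a, R, s', done).
n = 5
states = rng.normal(size=(n, state_dim))
actions = rng.integers(0, n_actions, size=n)  # with states, these form the inputs x = (s, a)
rewards = rng.normal(size=n)
next_states = rng.normal(size=(n, state_dim))
done = np.array([False, False, True, False, False])


def bellman_targets(rewards, next_states, done, gamma):
    """y = R + gamma * max_a' Q(s', a'), with no future term after a terminal step."""
    max_next_q = q_network(next_states).max(axis=1)
    return rewards + gamma * max_next_q * (~done)


y = bellman_targets(rewards, next_states, done, gamma)
print(y.shape)  # (5,)
```

The network would then be fitted so that its output for each $(s, a)$ is close to the matching $y$, and the targets recomputed with the improved network.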

Full learning algorithm (DQN)

Algorithm refinements

Improved neural network architecture

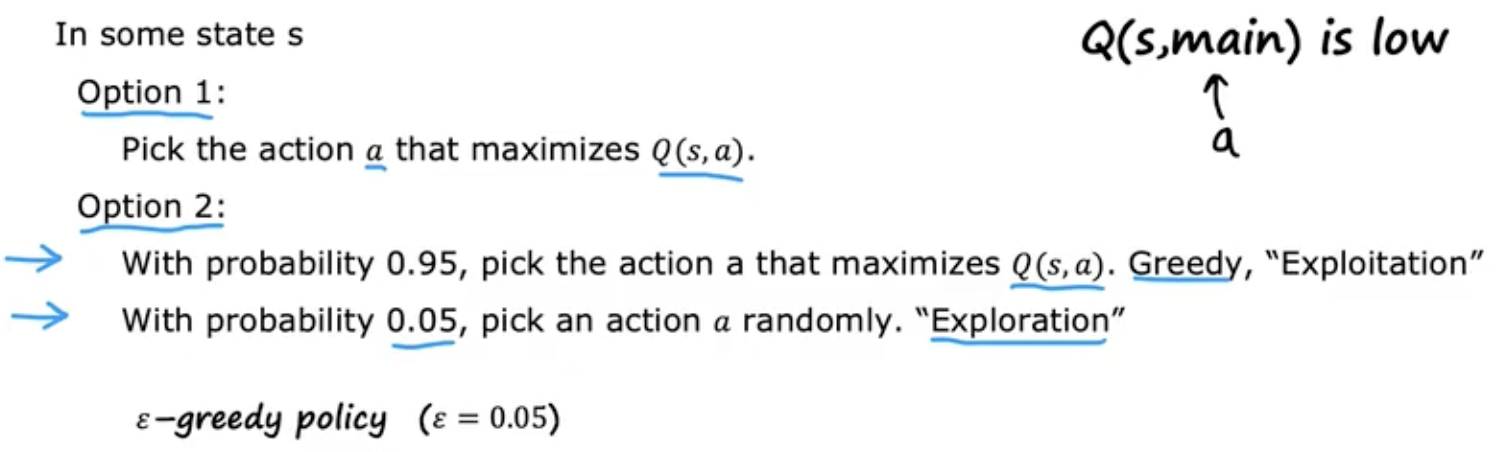

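$\epsilon$-greedy policy

Start with a relatively large $\epsilon$ and decrease it gradually, so that later on the agent relies more on $Q$ (acts greedily).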

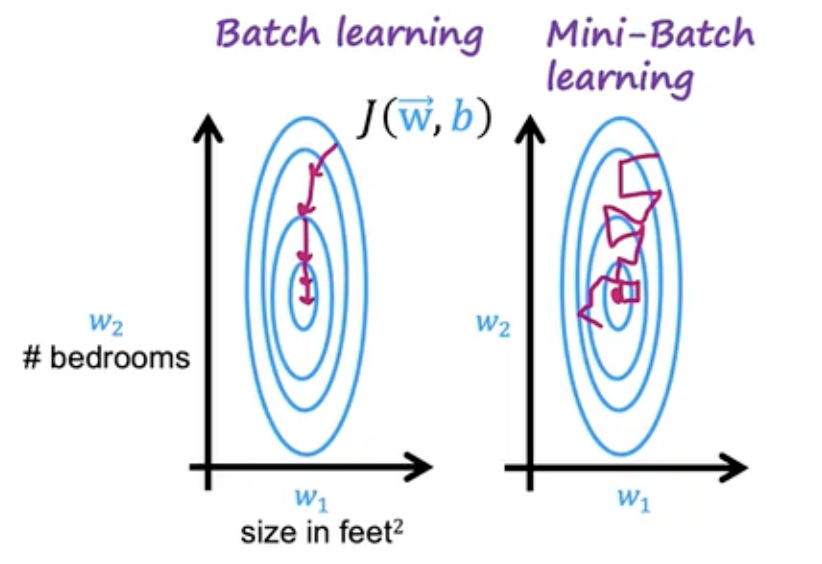
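A minimal sketch of $\epsilon$-greedy action selection with a decaying $\epsilon$ follows. The decay schedule (multiplicative, with a floor) and its constants are assumptions for illustration; other schedules work as well.

```python
import numpy as np


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))  # explore
    return int(np.argmax(q_values))              # exploit the current Q estimate


def decayed_epsilon(step, start=1.0, end=0.01, decay=0.995):
    """Hypothetical schedule: shrink epsilon each step, but never below `end`."""
    return max(end, start * decay ** step)


rng = np.random.default_rng(0)
q_for_state = [1.0, 5.0, 2.0]  # made-up Q-values for one state
for step in (0, 100, 1000):
    eps = decayed_epsilon(step)
    print(step, round(eps, 3), epsilon_greedy(q_for_state, eps, rng))
```

Early on the agent mostly explores; once $\epsilon$ has decayed, almost every action is the one with the highest estimated Q-value.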

Mini-batches and soft updates

Mini-batch

Goal: speed up training.

Core idea: each iteration trains on only a subset of the data.

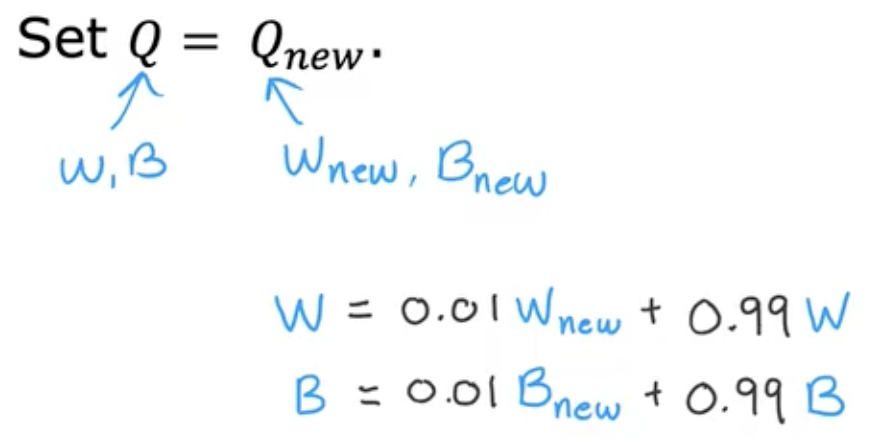
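The mini-batch idea can be sketched as sampling a fixed number of stored transitions at each training step instead of using all of them. The buffer capacity, the batch size of 64, and the fabricated transitions below are assumptions for the example.

```python
import random
from collections import deque

# Hypothetical store of past transitions (s, a, R, s', done).
# A bounded deque drops the oldest entries once it is full.
buffer = deque(maxlen=10_000)
for t in range(1_000):
    buffer.append((t, 0, 0.0, t + 1, False))  # fabricated transitions


def sample_minibatch(buffer, batch_size, rng):
    """Draw a random subset of transitions, without replacement."""
    return rng.sample(list(buffer), min(batch_size, len(buffer)))


rng = random.Random(0)
batch = sample_minibatch(buffer, 64, rng)
print(len(batch))  # 64
```

Each gradient step then costs the same regardless of how many transitions have been collected.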

Soft update

Goal: more reliable convergence and less oscillation.
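A soft update is commonly written as $W \leftarrow \tau W_{\text{new}} + (1 - \tau) W$: instead of overwriting the weights with the newly trained ones, only a small fraction $\tau$ of the new values is blended in. The sketch below assumes weights stored as a list of NumPy arrays and $\tau = 0.01$; both are illustrative choices.

```python
import numpy as np


def soft_update(current_weights, new_weights, tau=0.01):
    """Blend new weights into the current ones: tau * new + (1 - tau) * current."""
    return [tau * w_new + (1.0 - tau) * w_old
            for w_old, w_new in zip(current_weights, new_weights)]


# Made-up weights: one array per layer.
current = [np.zeros(3), np.zeros((2, 2))]
proposed = [np.ones(3), np.ones((2, 2))]

updated = soft_update(current, proposed)
print(updated[0])  # every entry moves only 1% of the way toward the new value
```

Because each step changes the weights only slightly, one unlucky mini-batch cannot make the Q estimate much worse all at once.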

The state of reinforcement learning in practice

- Much easier to get to work in a simulation than on a real robot!

- Far fewer applications than supervised and unsupervised learning.

- But … exciting research direction with potential for future applications.