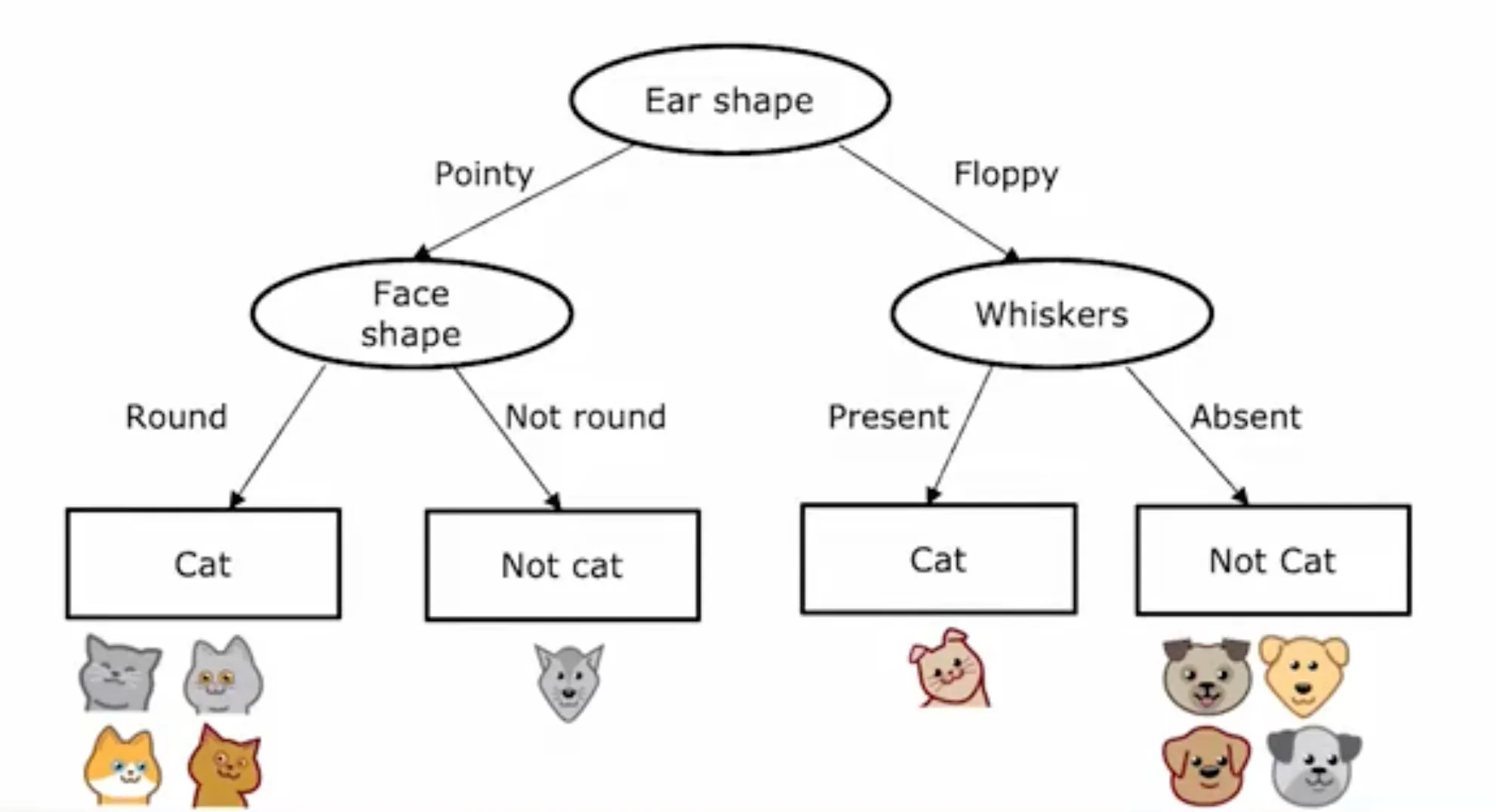

Decision Tree

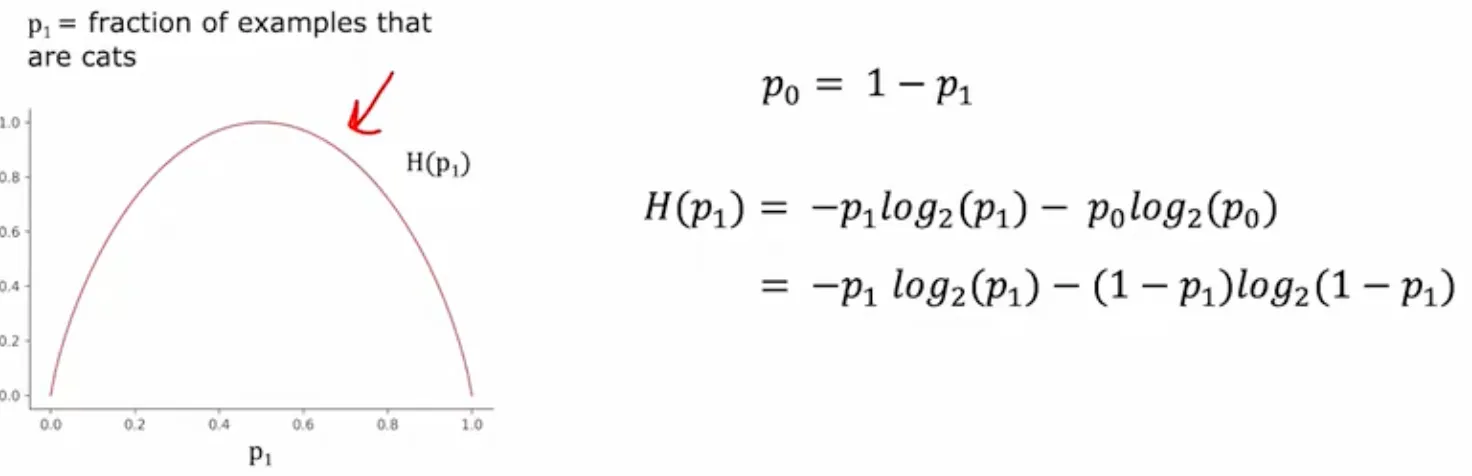

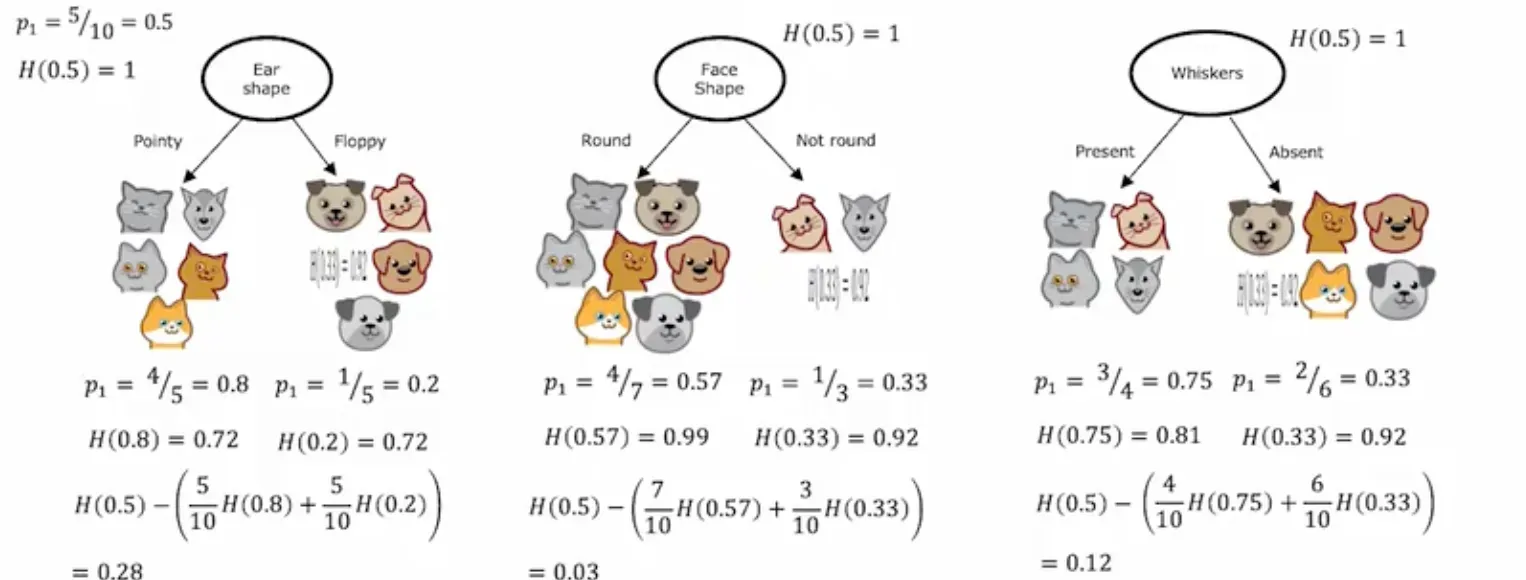

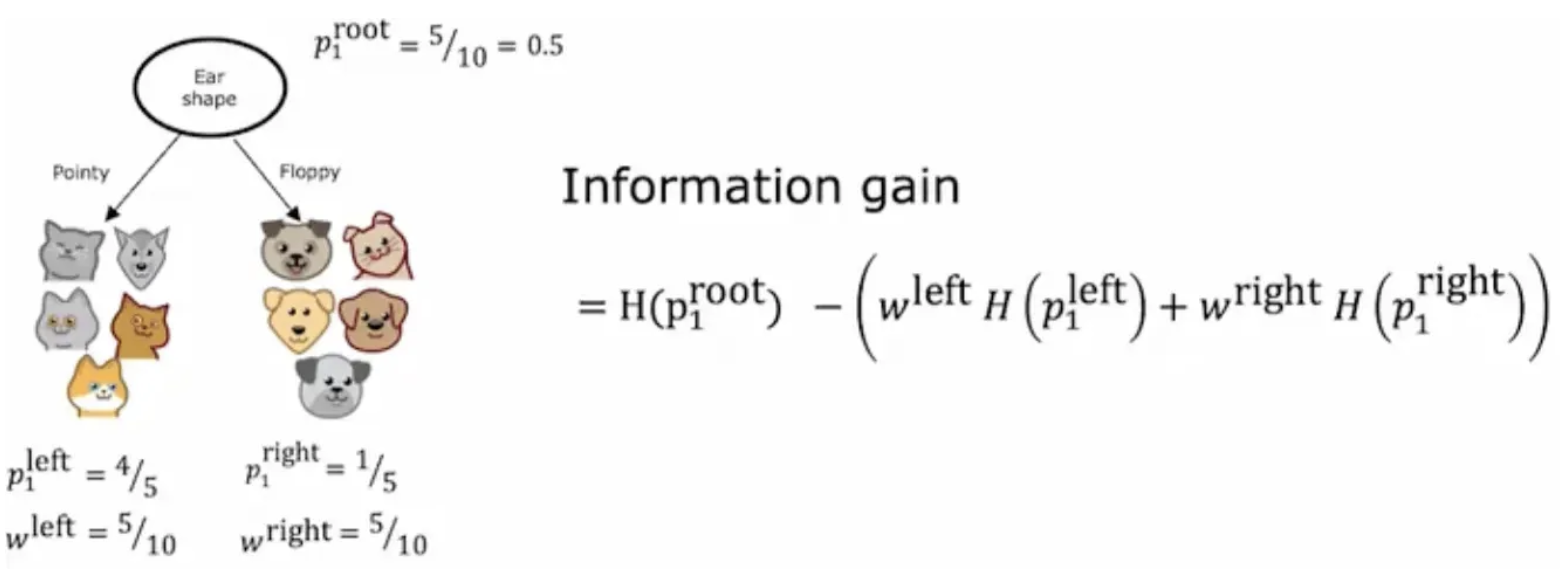

Entropy and information gain

Measuring purity

Choose the split feature that gives the higher information gain
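A minimal sketch of the purity measure, assuming binary labels (0/1) and entropy measured in bits. The function names `entropy`, `fraction_positive`, and `information_gain` are illustrative and do not come from the notes. Information gain is the entropy at the parent node minus the size-weighted average entropy of the two branches.

```python
import math

def entropy(p1):
    """Entropy (in bits) of a node where a fraction p1 of examples is positive."""
    if p1 == 0 or p1 == 1:
        return 0.0  # a pure node has zero entropy (0 * log2(0) is taken as 0)
    return -p1 * math.log2(p1) - (1 - p1) * math.log2(1 - p1)

def fraction_positive(labels):
    """Fraction of labels equal to 1 (0.0 for an empty branch)."""
    return sum(labels) / len(labels) if labels else 0.0

def information_gain(y_left, y_right):
    """Parent entropy minus the size-weighted entropy of the two branches."""
    y_root = y_left + y_right
    w_left = len(y_left) / len(y_root)
    w_right = len(y_right) / len(y_root)
    children = (w_left * entropy(fraction_positive(y_left))
                + w_right * entropy(fraction_positive(y_right)))
    return entropy(fraction_positive(y_root)) - children

# A 50/50 node is maximally impure; splitting it into pure branches removes all impurity.
print(entropy(0.5))                      # 1.0
print(information_gain([1, 1], [0, 0]))  # 1.0
```

A split that leaves both branches as mixed as the parent has a gain of 0, so it would never be preferred over an informative feature.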

Decision tree training (recursive)

- Start with all examples at the root node

- Calculate information gain for all possible features, and pick the one with the highest information gain

- Split dataset according to selected feature, and create left and right branches of the tree

- Keep repeating the splitting process until a stopping criterion is met:

  - When a node is 100% one class

  - When splitting a node would cause the tree to exceed the maximum depth

  - When the information gain from additional splits is less than a threshold

  - When the number of examples in a node is below a threshold
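The recursive procedure above can be sketched as follows. This is a simplified illustration, assuming binary (0/1) features and labels. The dictionary-based tree and the default values for `max_depth`, `min_gain`, and `min_examples` are hypothetical choices, not values from the notes.

```python
import math

def entropy(y):
    """Entropy (in bits) of a list of 0/1 labels."""
    if not y:
        return 0.0
    p1 = sum(y) / len(y)
    if p1 in (0.0, 1.0):
        return 0.0
    return -p1 * math.log2(p1) - (1 - p1) * math.log2(1 - p1)

def split(X, feature):
    """Indices of examples going left (feature == 1) and right (feature == 0)."""
    left = [i for i in range(len(X)) if X[i][feature] == 1]
    right = [i for i in range(len(X)) if X[i][feature] == 0]
    return left, right

def gain(X, y, feature):
    left, right = split(X, feature)
    w_left = len(left) / len(y)
    h_left = entropy([y[i] for i in left])
    h_right = entropy([y[i] for i in right])
    return entropy(y) - (w_left * h_left + (1 - w_left) * h_right)

def build_tree(X, y, depth=0, max_depth=3, min_gain=1e-9, min_examples=2):
    majority = int(2 * sum(y) >= len(y))
    # Stopping criteria: pure node, maximum depth reached, or too few examples.
    if entropy(y) == 0.0 or depth >= max_depth or len(y) < min_examples:
        return {"leaf": majority}
    # Pick the feature with the highest information gain.
    best = max(range(len(X[0])), key=lambda f: gain(X, y, f))
    # Stopping criterion: the best available gain is below the threshold.
    if gain(X, y, best) < min_gain:
        return {"leaf": majority}
    left, right = split(X, best)
    args = (depth + 1, max_depth, min_gain, min_examples)
    return {
        "feature": best,
        "left": build_tree([X[i] for i in left], [y[i] for i in left], *args),
        "right": build_tree([X[i] for i in right], [y[i] for i in right], *args),
    }

def predict(tree, x):
    while "leaf" not in tree:
        tree = tree["left"] if x[tree["feature"]] == 1 else tree["right"]
    return tree["leaf"]

# Toy data (made up for illustration): the label equals feature 0.
X = [[1, 0], [1, 1], [0, 0], [0, 1]]
y = [1, 1, 0, 0]
tree = build_tree(X, y)
print(tree["feature"])               # 0
print([predict(tree, x) for x in X]) # [1, 1, 0, 0]
```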

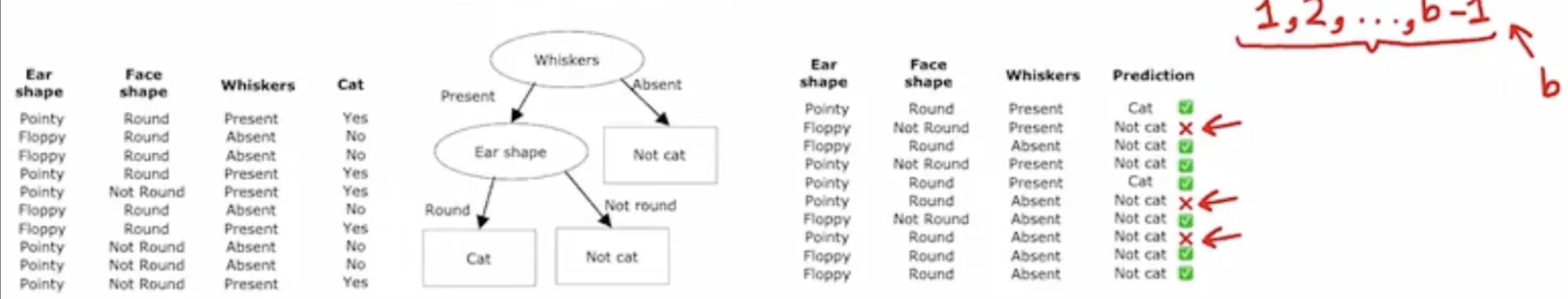

Features that take multiple discrete values

Create a one-hot encoding: replace the feature with one binary (0/1) feature per possible value
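A small sketch of one-hot encoding in plain Python. The helper name `one_hot` and the ear-shape values are made up for illustration. Each category becomes its own 0/1 column, and exactly one column is 1 per example.

```python
def one_hot(values):
    """Return the sorted category list and one 0/1 row per input value."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows

ear_shapes = ["pointy", "floppy", "oval", "pointy"]  # hypothetical feature values
categories, rows = one_hot(ear_shapes)
print(categories)  # ['floppy', 'oval', 'pointy']
print(rows[0])     # [0, 0, 1]
```

After encoding, every column is a binary feature, so the binary splitting procedure applies unchanged.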

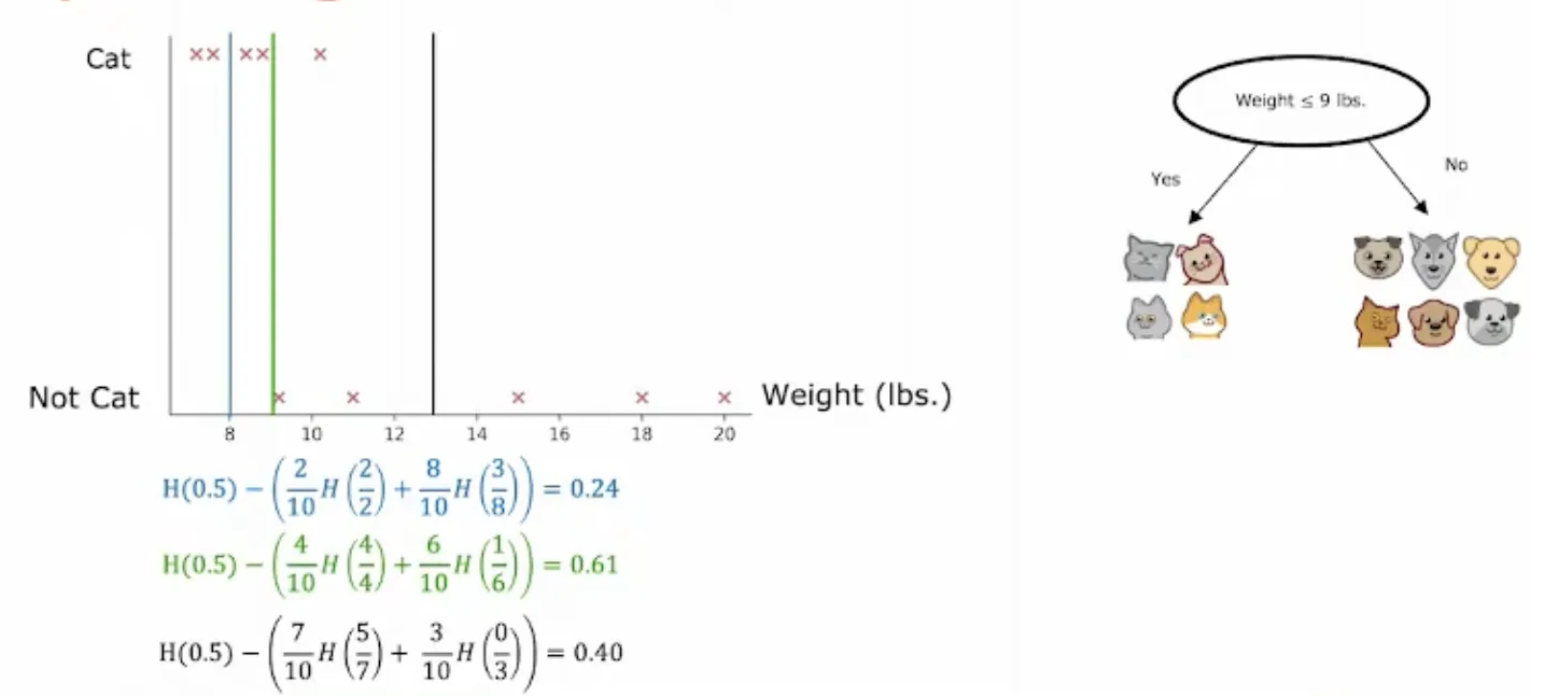

Features that take continuous values
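The notes only name this case. One common approach, assumed here rather than taken from the notes, is to try the midpoints between consecutive sorted values as candidate thresholds and keep the one with the highest information gain. The helper name `best_threshold` is hypothetical.

```python
import math

def entropy(y):
    """Entropy (in bits) of a list of 0/1 labels."""
    if not y:
        return 0.0
    p1 = sum(y) / len(y)
    if p1 in (0.0, 1.0):
        return 0.0
    return -p1 * math.log2(p1) - (1 - p1) * math.log2(1 - p1)

def best_threshold(values, labels):
    """Return (threshold, gain) for the split `value <= threshold` with the highest gain."""
    distinct = sorted(set(values))
    # Candidate thresholds: midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]
    best = (None, 0.0)
    for t in candidates:
        left = [l for v, l in zip(values, labels) if v <= t]
        right = [l for v, l in zip(values, labels) if v > t]
        w_left = len(left) / len(labels)
        g = entropy(labels) - (w_left * entropy(left) + (1 - w_left) * entropy(right))
        if g > best[1]:
            best = (t, g)
    return best

# Toy data (made up): small values are class 0, large values are class 1.
print(best_threshold([1.0, 2.0, 3.0, 4.0], [0, 0, 1, 1]))  # (2.5, 1.0)
```

The chosen threshold turns the continuous feature into a binary test, which is then compared against the other features by information gain as usual.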

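Random forest

Using multiple decision trees

Drawback of a single decision tree: it is highly sensitive to small changes in the data

Build multiple trees and let them vote on the final prediction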

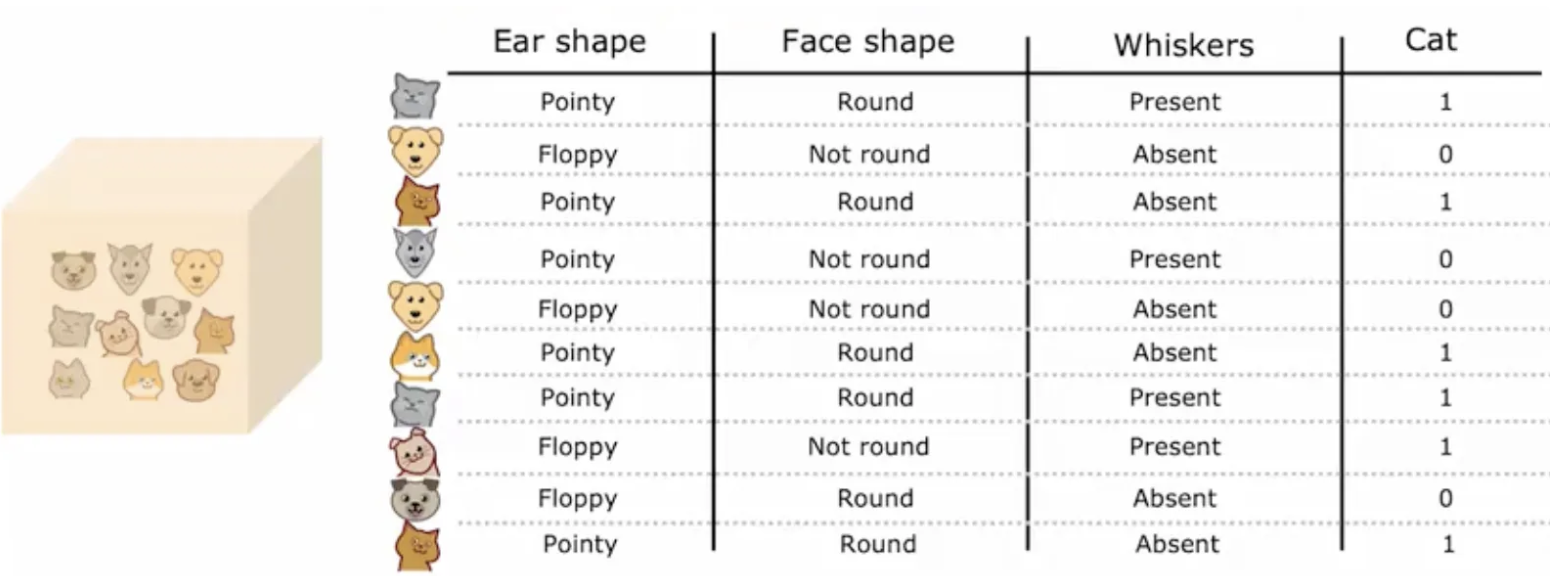

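Sampling with replacement

Construct a new dataset of the same size by drawing examples at random from the original, so some examples repeat and others are left out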
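A minimal sketch of sampling with replacement using only the standard library. The helper name `bootstrap_sample` and the example names are illustrative.

```python
import random

def bootstrap_sample(examples, rng):
    """Draw len(examples) items uniformly at random, with replacement."""
    m = len(examples)
    return [examples[rng.randrange(m)] for _ in range(m)]

rng = random.Random(0)  # fixed seed only so the sketch is repeatable
original = ["cat_1", "cat_2", "dog_1", "dog_2", "dog_3"]  # hypothetical examples
resampled = bootstrap_sample(original, rng)
print(resampled)  # same size as the original; duplicates are expected
```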

Random forest algorithm

Given training set of size m

For b = 1 to B:

Use sampling with replacement to create a new training set of size m

Train a decision tree on the new dataset

Improvement: randomizing the feature choice

At each node, when choosing a feature to use to split, if n features are available, pick a random subset of k < n features and allow the algorithm to only choose from that subset of features.

More robust
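Putting the pieces together, a random forest can be sketched as below. This is a simplified illustration assuming binary (0/1) features and labels. The defaults `B=25`, `max_depth=4`, and `k = sqrt(n)` are assumptions for the sketch (the notes only require k < n), and all function names are hypothetical.

```python
import math
import random

def entropy(y):
    if not y:
        return 0.0
    p1 = sum(y) / len(y)
    if p1 in (0.0, 1.0):
        return 0.0
    return -p1 * math.log2(p1) - (1 - p1) * math.log2(1 - p1)

def gain(X, y, f):
    left = [y[i] for i in range(len(y)) if X[i][f] == 1]
    right = [y[i] for i in range(len(y)) if X[i][f] == 0]
    w = len(left) / len(y)
    return entropy(y) - (w * entropy(left) + (1 - w) * entropy(right))

def build_tree(X, y, k, rng, depth=0, max_depth=4):
    majority = int(2 * sum(y) >= len(y))
    if entropy(y) == 0.0 or depth >= max_depth:
        return {"leaf": majority}
    # Randomized feature choice: consider only k of the n features at this node.
    subset = rng.sample(range(len(X[0])), k)
    best = max(subset, key=lambda f: gain(X, y, f))
    if gain(X, y, best) <= 0.0:
        return {"leaf": majority}
    left = [i for i in range(len(y)) if X[i][best] == 1]
    right = [i for i in range(len(y)) if X[i][best] == 0]
    return {
        "feature": best,
        "left": build_tree([X[i] for i in left], [y[i] for i in left],
                           k, rng, depth + 1, max_depth),
        "right": build_tree([X[i] for i in right], [y[i] for i in right],
                            k, rng, depth + 1, max_depth),
    }

def predict_tree(tree, x):
    while "leaf" not in tree:
        tree = tree["left"] if x[tree["feature"]] == 1 else tree["right"]
    return tree["leaf"]

def train_forest(X, y, B=25, k=None, seed=0):
    rng = random.Random(seed)
    m, n = len(X), len(X[0])
    k = k or max(1, int(math.sqrt(n)))  # assumed default, not from the notes
    forest = []
    for _ in range(B):
        # Sampling with replacement: a new training set of size m for each tree.
        idx = [rng.randrange(m) for _ in range(m)]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], k, rng))
    return forest

def predict_forest(forest, x):
    # Majority vote over the trees (ties go to class 1).
    votes = sum(predict_tree(t, x) for t in forest)
    return int(2 * votes >= len(forest))

# Toy data (made up): the label equals feature 0.
X = [[a, b, c] for a in (0, 1) for b in (0, 1) for c in (0, 1)]
y = [x[0] for x in X]
forest = train_forest(X, y, B=25)
print(len(forest), predict_forest(forest, [1, 0, 1]))
```

Because each tree sees a different resampled dataset and a different feature subset at each node, the trees disagree in different ways, and the vote averages out much of that variation.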

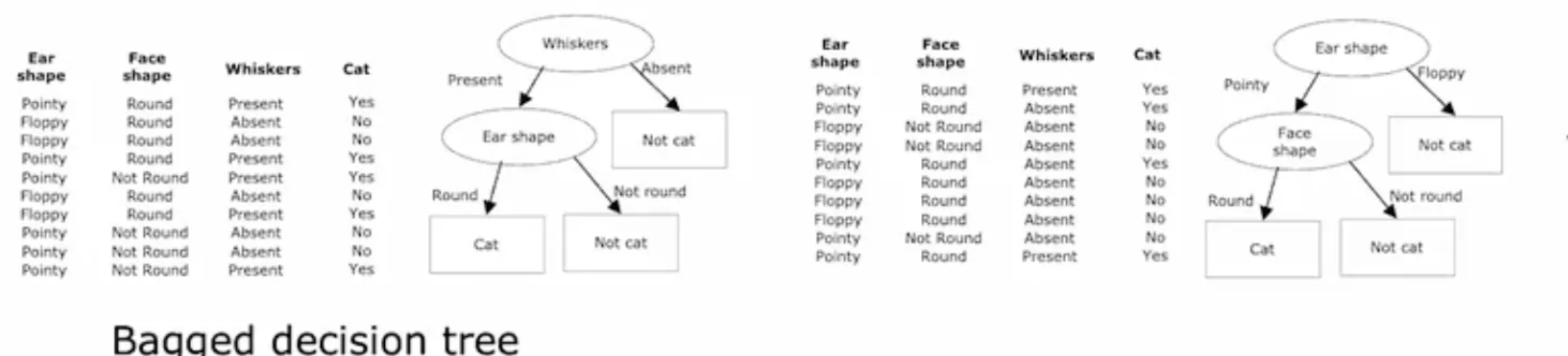

XGBoost

Boosted trees intuition

Focus training on the examples that the earlier trees handled poorly

Given training set of size m

For b = 1 to B:

Use sampling with replacement to create a new training set of size m

But instead of picking from all examples with equal (1/m) probability, make it more likely to pick examples that the previously trained trees misclassify

Train a decision tree on the new dataset
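The resampling step in this intuition can be sketched with weighted sampling. The helper name `weighted_resample` and the `boost` factor of 3 are hypothetical, chosen only to illustrate the idea. As the notes say further down, XGBoost itself assigns weights to training examples rather than resampling, so this is not how the library works internally.

```python
import random

def weighted_resample(examples, misclassified, rng, boost=3.0):
    """Sample len(examples) items with replacement, favoring misclassified ones.

    misclassified[i] is True if the trees trained so far get examples[i] wrong.
    """
    weights = [boost if miss else 1.0 for miss in misclassified]
    return rng.choices(examples, weights=weights, k=len(examples))

rng = random.Random(0)  # fixed seed only so the sketch is repeatable
examples = list(range(10))                 # stand-ins for training examples
misclassified = [i < 2 for i in examples]  # pretend examples 0 and 1 were misclassified
print(weighted_resample(examples, misclassified, rng))
```

With these weights, each draw picks a misclassified example with probability 6/14 instead of the uniform 2/10, so the next tree sees the hard examples more often.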

XGBoost (eXtreme Gradient Boosting)

- Open source implementation of boosted trees

- Fast, efficient implementation

- Good choice of default splitting criteria and criteria for when to stop splitting

- Built-in regularization to prevent overfitting

- Highly competitive algorithm for machine learning competitions (e.g., Kaggle competitions)

- Assigns different weights to different training examples

from xgboost import XGBClassifier

model = XGBClassifier()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

from xgboost import XGBRegressor

model = XGBRegressor()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

Decision Trees vs Neural Networks

Decision Trees and Tree ensembles

Trains one tree at a time

- Works well on tabular (structured) data

- Not recommended for unstructured data (images, audio, text)

- Fast

- Small decision trees may be human interpretable

Neural Networks

Trained with gradient descent

- Works well on all types of data, including tabular (structured) and unstructured data

- May be slower than a decision tree

- Works with transfer learning

- When building a system of multiple models working together, it might be easier to string together multiple neural networks