langchain - Agent

An Agent can be viewed as a state machine.

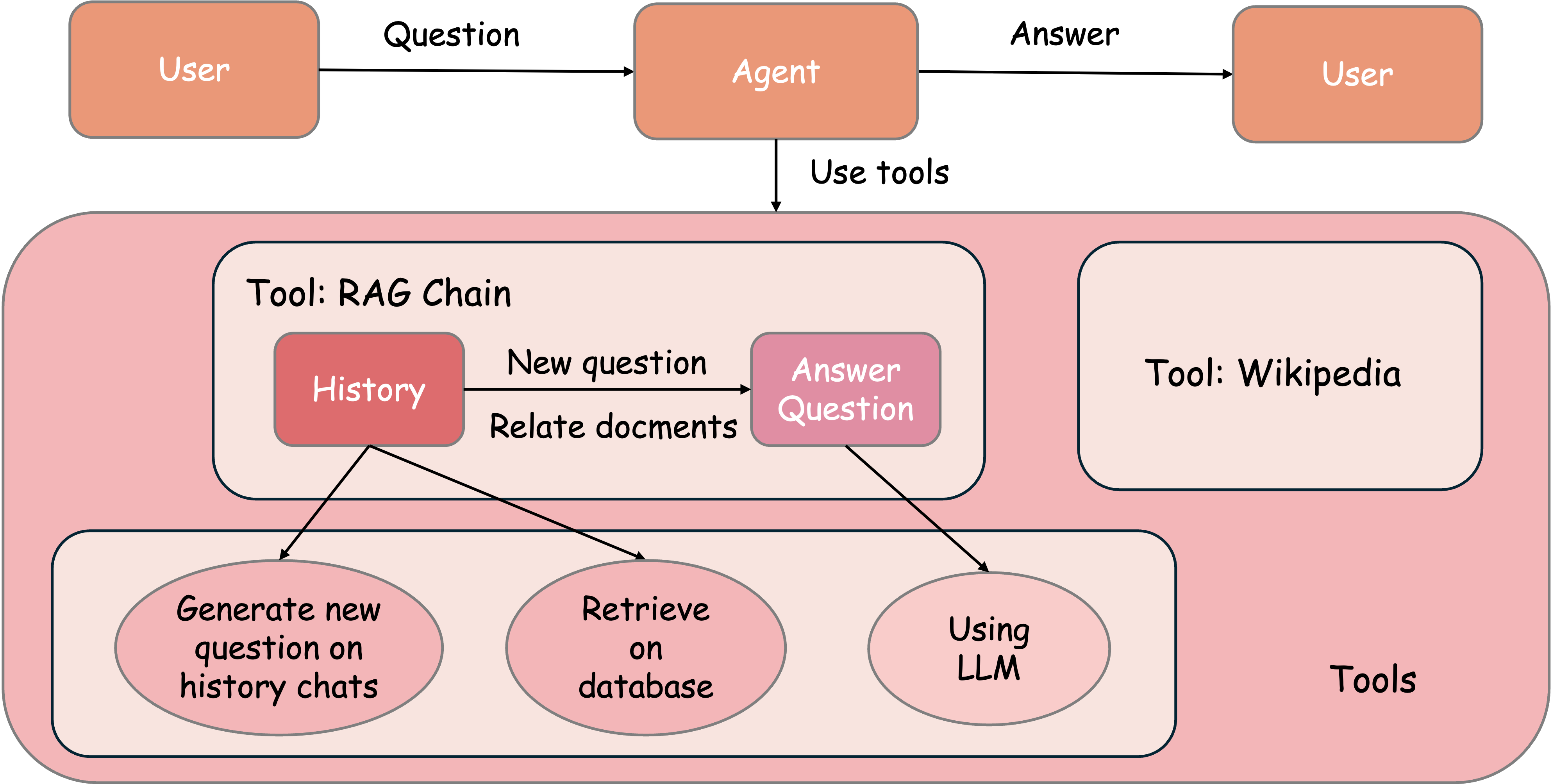
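Here is a minimal sketch of the state-machine view. It assumes an agent loop with four hypothetical states (`THINK`, `ACT`, `OBSERVE`, `FINISH`), and `decide` is a scripted stand-in for an LLM call. It only illustrates the idea and does not show how LangChain implements `AgentExecutor` internally.

```python
from enum import Enum, auto


class State(Enum):
    THINK = auto()    # choose the next action
    ACT = auto()      # call the chosen tool
    OBSERVE = auto()  # record the tool's result
    FINISH = auto()   # terminal state: final answer produced


def run_agent(decide, tools, question, max_steps=10):
    """Drive a toy agent as an explicit state machine.

    `decide(question, observations)` is a hypothetical stand-in for the LLM.
    It returns ("tool", name, arg) or ("final", answer).
    """
    state = State.THINK
    observations = []
    action = None
    result = None
    for _ in range(max_steps):
        if state is State.THINK:
            action = decide(question, observations)
            state = State.FINISH if action[0] == "final" else State.ACT
        elif state is State.ACT:
            _, name, arg = action
            result = tools[name](arg)
            state = State.OBSERVE
        elif state is State.OBSERVE:
            observations.append(result)
            state = State.THINK
        if state is State.FINISH:
            return action[1], observations
    return None, observations  # step budget exhausted without finishing


def scripted_decide(question, observations):
    # Call the calculator once, then answer with its result
    if not observations:
        return ("tool", "calc", "2 + 3")
    return ("final", f"The answer is {observations[-1]}")


tools = {"calc": lambda expr: sum(int(x) for x in expr.split("+"))}
answer, obs = run_agent(scripted_decide, tools, "What is 2 + 3?")
```

Making each state explicit means every transition, including the terminal one, is visible in one place. It also means a step budget (`max_steps`) is enough to guarantee the loop stops.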

agent_react_docstore

Key code

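```python
# Set up a ReAct agent with a document-store retriever

# Load the ReAct prompt from LangChain Hub
react_docstore_prompt = hub.pull("hwchase17/react")

tools = [
    Tool(
        name="Answer Question",
        # Forwards the agent's Action Input to the RAG chain.
        # AgentExecutor does not pass chat_history to the tool function,
        # so kwargs.get(...) falls back to [] here.
        func=lambda input, **kwargs: rag_chain.invoke(
            {"input": input, "chat_history": kwargs.get("chat_history", [])}
        ),
        description="useful for when you need to answer questions about the context",
    )
]

# Create the ReAct agent that uses the document-store tool
agent = create_react_agent(
    llm=llm,
    tools=tools,
    prompt=react_docstore_prompt,
)

# Wrap the agent in an executor that runs the Thought/Action/Observation loop
agent_executor = AgentExecutor.from_agent_and_tools(
    agent=agent,
    tools=tools,
    handle_parsing_errors=True,  # send parsing errors back to the LLM instead of raising
    verbose=True,
)
```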
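The `hwchase17/react` prompt asks the LLM to reply in a `Thought` / `Action` / `Action Input` format and to end with `Final Answer:`. The agent then has to parse that text, and `handle_parsing_errors=True` covers the case where parsing fails. The sketch below shows the idea with a simplified parser. `parse_react_output`, `OutputParseError`, and the two dataclasses are hypothetical names, not LangChain APIs. The parser that `create_react_agent` actually uses, `ReActSingleInputOutputParser`, handles more edge cases.

```python
import re
from dataclasses import dataclass
from typing import Union


@dataclass
class AgentAction:
    tool: str
    tool_input: str


@dataclass
class AgentFinish:
    output: str


class OutputParseError(ValueError):
    """Raised when the LLM text matches neither expected format."""


# "Action: <tool>" on one line, then "Action Input: <input>" on the next
ACTION_RE = re.compile(r"Action\s*:\s*(.*?)\s*\nAction\s*Input\s*:\s*(.*)", re.DOTALL)


def parse_react_output(text: str) -> Union[AgentAction, AgentFinish]:
    # Simplification: a final answer wins if present
    if "Final Answer:" in text:
        return AgentFinish(output=text.split("Final Answer:")[-1].strip())
    match = ACTION_RE.search(text)
    if match:
        tool = match.group(1).strip()
        tool_input = match.group(2).strip().strip('"')
        return AgentAction(tool=tool, tool_input=tool_input)
    # This is the situation handle_parsing_errors=True is meant to absorb
    raise OutputParseError(f"Could not parse LLM output: {text!r}")


step = parse_react_output(
    "Thought: I should look this up.\n"
    "Action: Answer Question\n"
    "Action Input: What is LangChain?"
)
```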

Full code

```python
import os

from dotenv import load_dotenv
from langchain import hub
from langchain.agents import AgentExecutor, create_react_agent
from langchain.chains import create_history_aware_retriever, create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain_community.vectorstores import Chroma
from langchain_core.messages import AIMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_core.tools import Tool
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

# Load environment variables (e.g. OPENAI_API_KEY) from the .env file
load_dotenv()

# Locate the existing Chroma vector store
current_dir = os.path.dirname(os.path.abspath(__file__))
db_dir = os.path.join(current_dir, "..", "..", "4_rag", "db")
persistent_directory = os.path.join(db_dir, "chroma_db_with_metadata")

# Fail early if the vector store has not been built yet
if not os.path.exists(persistent_directory):
    raise FileNotFoundError(
        f"The directory {persistent_directory} does not exist. Please check the path."
    )

# Define the embedding model (should match the one used to build the store)
embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

# Load the existing vector store once, with the embedding function attached
print("Loading existing vector store...")
db = Chroma(persist_directory=persistent_directory, embedding_function=embeddings)

# Create a retriever for querying the vector store
# `search_type` specifies the type of search (e.g., similarity)
# `search_kwargs` holds extra search arguments (e.g., number of results to return)
retriever = db.as_retriever(
    search_type="similarity",
    search_kwargs={"k": 3},
)

# Create a ChatOpenAI model
llm = ChatOpenAI(model="gpt-4o")

# Contextualize-question prompt: tells the LLM to rewrite the latest question
# as a standalone question, using the chat history if needed
contextualize_q_system_prompt = (
    "Given a chat history and the latest user question "
    "which might reference context in the chat history, "
    "formulate a standalone question which can be understood "
    "without the chat history. Do NOT answer the question, just "
    "reformulate it if needed and otherwise return it as is."
)

# Prompt template for contextualizing questions
contextualize_q_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", contextualize_q_system_prompt),
        MessagesPlaceholder("chat_history"),
        ("human", "{input}"),
    ]
)

# History-aware retriever: uses the LLM to reformulate the question before retrieval
history_aware_retriever = create_history_aware_retriever(
    llm, retriever, contextualize_q_prompt
)

# Answer-question prompt: asks for concise answers based on the retrieved
# context and says what to do when the answer is unknown
qa_system_prompt = (
    "You are an assistant for question-answering tasks. Use "
    "the following pieces of retrieved context to answer the "
    "question. If you don't know the answer, just say that you "
    "don't know. Use three sentences maximum and keep the answer "
    "concise."
    "\n\n"
    "{context}"
)

# Prompt template for answering questions
qa_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", qa_system_prompt),
        MessagesPlaceholder("chat_history"),
        ("human", "{input}"),
    ]
)

# `create_stuff_documents_chain` stuffs all retrieved documents into the prompt
question_answer_chain = create_stuff_documents_chain(llm, qa_prompt)

# Retrieval chain: history-aware retriever + question-answering chain
rag_chain = create_retrieval_chain(history_aware_retriever, question_answer_chain)

# Set up a ReAct agent with the document-store retriever
# Load the ReAct prompt from LangChain Hub
react_docstore_prompt = hub.pull("hwchase17/react")

tools = [
    Tool(
        name="Answer Question",
        # AgentExecutor does not pass chat_history to the tool, so this falls back to []
        func=lambda input, **kwargs: rag_chain.invoke(
            {"input": input, "chat_history": kwargs.get("chat_history", [])}
        ),
        description="useful for when you need to answer questions about the context",
    )
]

# Create the ReAct agent with the document-store tool
agent = create_react_agent(
    llm=llm,
    tools=tools,
    prompt=react_docstore_prompt,
)

agent_executor = AgentExecutor.from_agent_and_tools(
    agent=agent,
    tools=tools,
    handle_parsing_errors=True,
    verbose=True,
)

# Simple REPL; type "exit" to quit
chat_history = []
while True:
    query = input("You: ")
    if query.lower() == "exit":
        break
    # Note: the "hwchase17/react" prompt has no chat_history variable,
    # so the agent itself does not see this history
    response = agent_executor.invoke({"input": query, "chat_history": chat_history})
    print(f"AI: {response['output']}")

    # Update history
    chat_history.append(HumanMessage(content=query))
    chat_history.append(AIMessage(content=response["output"]))
```

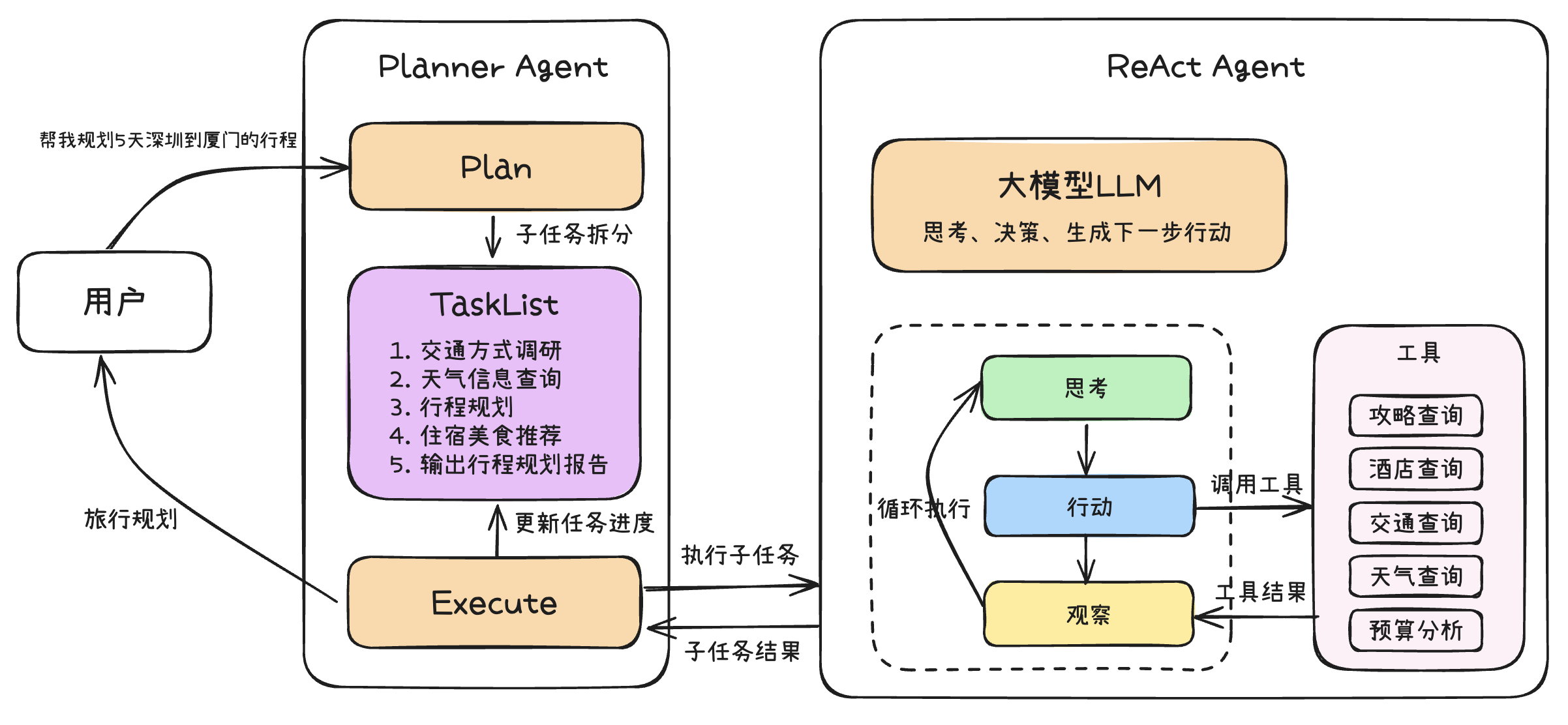

Agent frameworks

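CoT (Chain-of-Thought): guides the model to generate a series of structured intermediate reasoning steps before it gives the final answer.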

Drawback: generating long reasoning chains increases compute cost and response latency.
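Here is a minimal sketch of zero-shot CoT prompting. It assumes a plain-text prompt and a final line prefixed with `Answer:`; both are conventions chosen for this example, not a LangChain API. The helper names are hypothetical, and the completion is hand-written rather than produced by a model. The reasoning text that comes before `Answer:` is the overhead the drawback refers to.

```python
def build_cot_prompt(question: str) -> str:
    # Ask for intermediate reasoning first, then a final line starting with "Answer:"
    return (
        f"Question: {question}\n"
        "Let's think step by step, then give the final result "
        "on a last line starting with 'Answer:'."
    )


def extract_answer(completion: str) -> str:
    # Keep only the text after the last "Answer:" marker; the reasoning is discarded
    marker = "Answer:"
    if marker not in completion:
        raise ValueError("no final answer found")
    return completion.rsplit(marker, 1)[1].strip()


# Hand-written completion standing in for a real model response
completion = (
    "There are 3 boxes with 4 apples each, so 3 * 4 = 12 apples.\n"
    "Eating 2 leaves 12 - 2 = 10.\n"
    "Answer: 10"
)
prompt = build_cot_prompt("3 boxes hold 4 apples each; I eat 2. How many are left?")
final = extract_answer(completion)
# Rough proxy for the cost: share of output characters spent on reasoning
reasoning_share = 1 - len(final) / len(completion)
```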

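ReAct: combines Reasoning with Acting. The model can interact with external tools or environments while it reasons, so it can fetch up-to-date information, perform concrete actions, and adjust its next steps based on the feedback.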
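The ReAct cycle can be sketched as a loop that alternates model steps and tool calls. Each tool result is appended to a scratchpad that the model sees on the next step. `fake_llm` is a hypothetical scripted stand-in for a real model, and the `Action:` / `Observation:` text format only loosely mirrors the `hwchase17/react` prompt. This is not LangChain's `AgentExecutor`.

```python
import re
from typing import Callable, Dict

ACTION_RE = re.compile(r"Action: (.+)\nAction Input: (.+)")


def react_loop(llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
               question: str, max_steps: int = 5) -> str:
    scratchpad = ""  # accumulated Thought/Action/Observation text
    for _ in range(max_steps):
        step = llm(f"Question: {question}\n{scratchpad}")  # reason
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            # Rough analogue of handle_parsing_errors: report the problem and retry
            scratchpad += f"{step}\nObservation: invalid format, try again\n"
            continue
        tool, tool_input = match.group(1).strip(), match.group(2).strip()
        observation = tools[tool](tool_input)  # act
        scratchpad += f"{step}\nObservation: {observation}\n"  # observe
    raise RuntimeError("agent stopped: max_steps reached")


def fake_llm(prompt: str) -> str:
    # Scripted "model": look something up first, then answer from the observation
    if "Observation:" not in prompt:
        return "Thought: I need the release year.\nAction: lookup\nAction Input: Python"
    observed = prompt.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I now know the answer.\nFinal Answer: {observed}"


tools = {"lookup": lambda name: {"Python": "1991"}.get(name, "unknown")}
answer = react_loop(fake_llm, tools, "When was Python first released?")
```

Because each observation feeds back into the next prompt, the model can change course after every tool call. That adaptivity is what separates ReAct from reasoning alone.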

Plan-and-Execute: an extension of ReAct that explicitly splits the agent workflow into two phases, planning and execution.
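Here is a minimal sketch of the two-phase split. It assumes a hypothetical `planner` that returns the full list of steps up front and a hypothetical `executor` that runs one step at a time, with access to earlier results. In a real agent both would be LLM calls, and in common implementations the executor is itself a ReAct-style agent. Here they are plain functions with made-up data so the control flow is easy to see. Replanning after each step is omitted.

```python
from typing import Callable, List, Tuple

Planner = Callable[[str], List[str]]
Executor = Callable[[str, List[Tuple[str, str]]], str]


def plan_and_execute(goal: str, planner: Planner, executor: Executor) -> List[Tuple[str, str]]:
    # Phase 1: plan once, before any action is taken
    plan = planner(goal)
    # Phase 2: execute the steps in order, passing along earlier results
    past_steps: List[Tuple[str, str]] = []
    for step in plan:
        result = executor(step, past_steps)
        past_steps.append((step, result))
    return past_steps


def toy_planner(goal: str) -> List[str]:
    # Hypothetical fixed plan standing in for an LLM planner
    return ["find population of A", "find population of B", "add them"]


def toy_executor(step: str, past_steps: List[Tuple[str, str]]) -> str:
    # Hypothetical executor: look up made-up numbers, or combine earlier results
    data = {"find population of A": "120", "find population of B": "80"}
    if step in data:
        return data[step]
    return str(sum(int(result) for _, result in past_steps))


history = plan_and_execute("total population of A and B", toy_planner, toy_executor)
final_answer = history[-1][1]
```

Compared with the ReAct loop, the planner is called once rather than before every action. This can reduce the number of expensive reasoning calls, but the plan cannot react to surprises unless a replanning step is added.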