
January 11, 2025

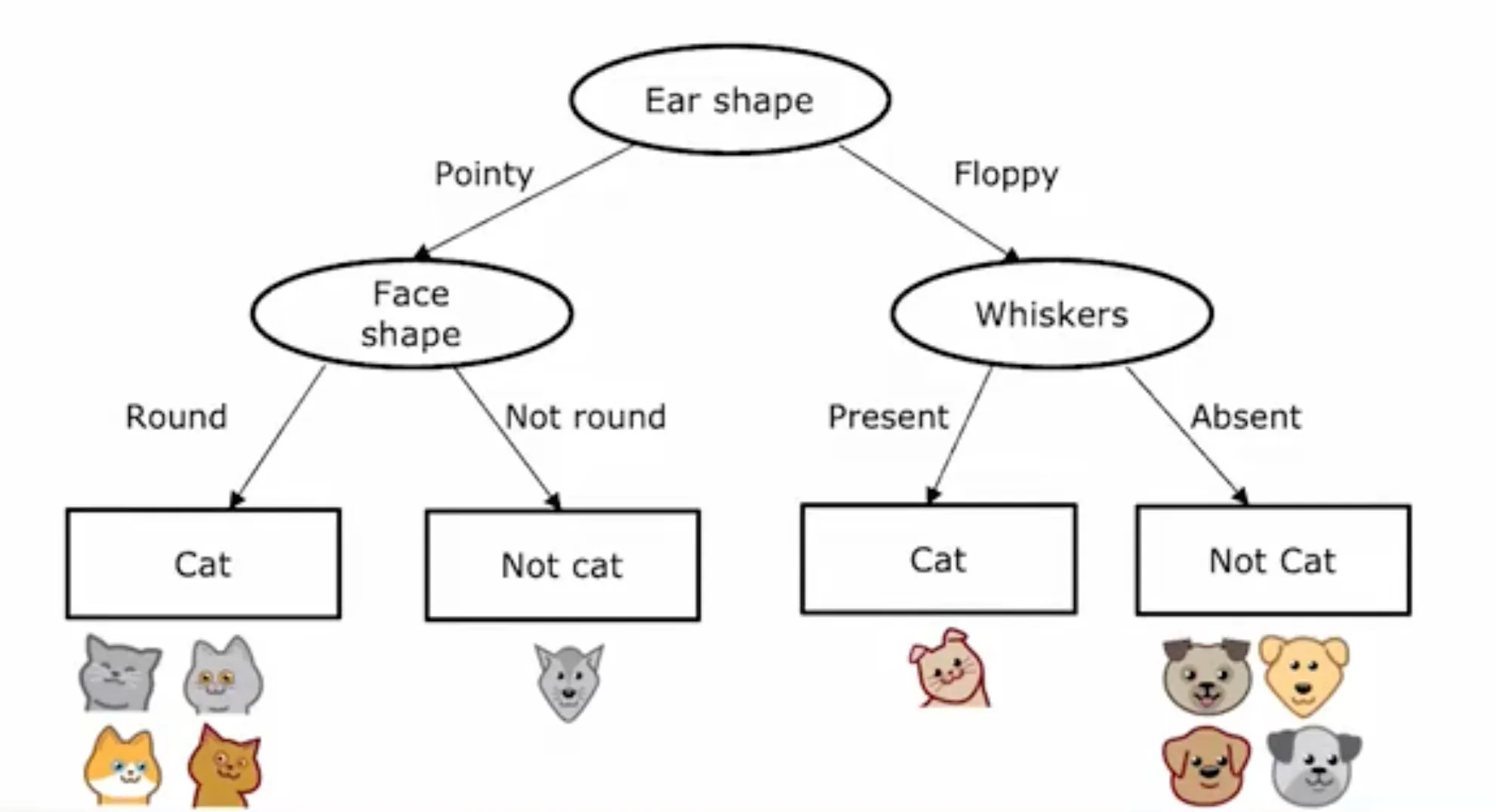

Decision Tree

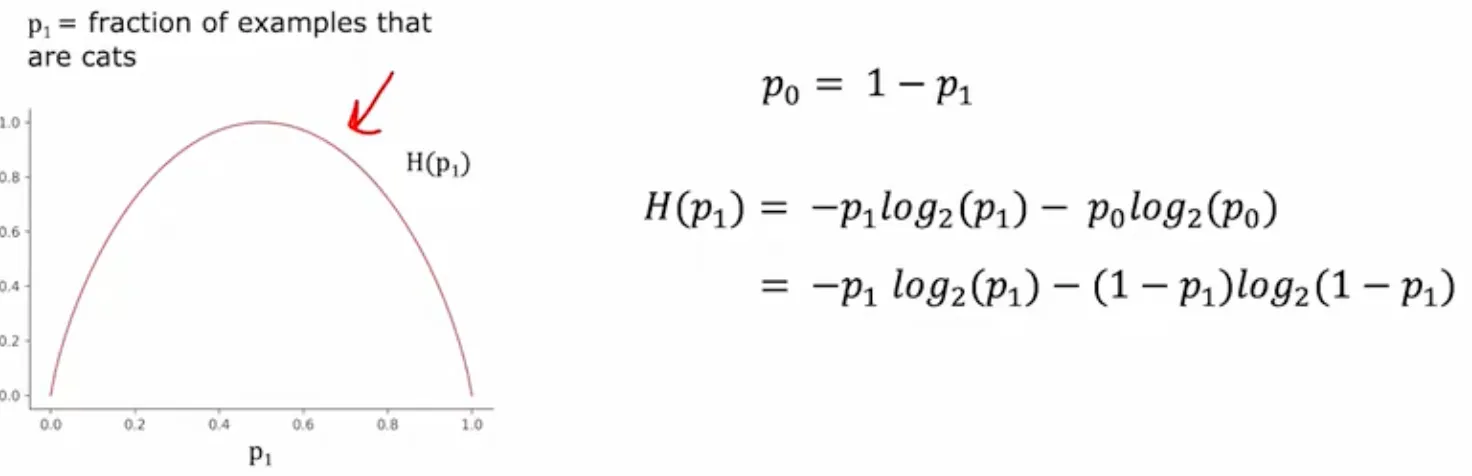

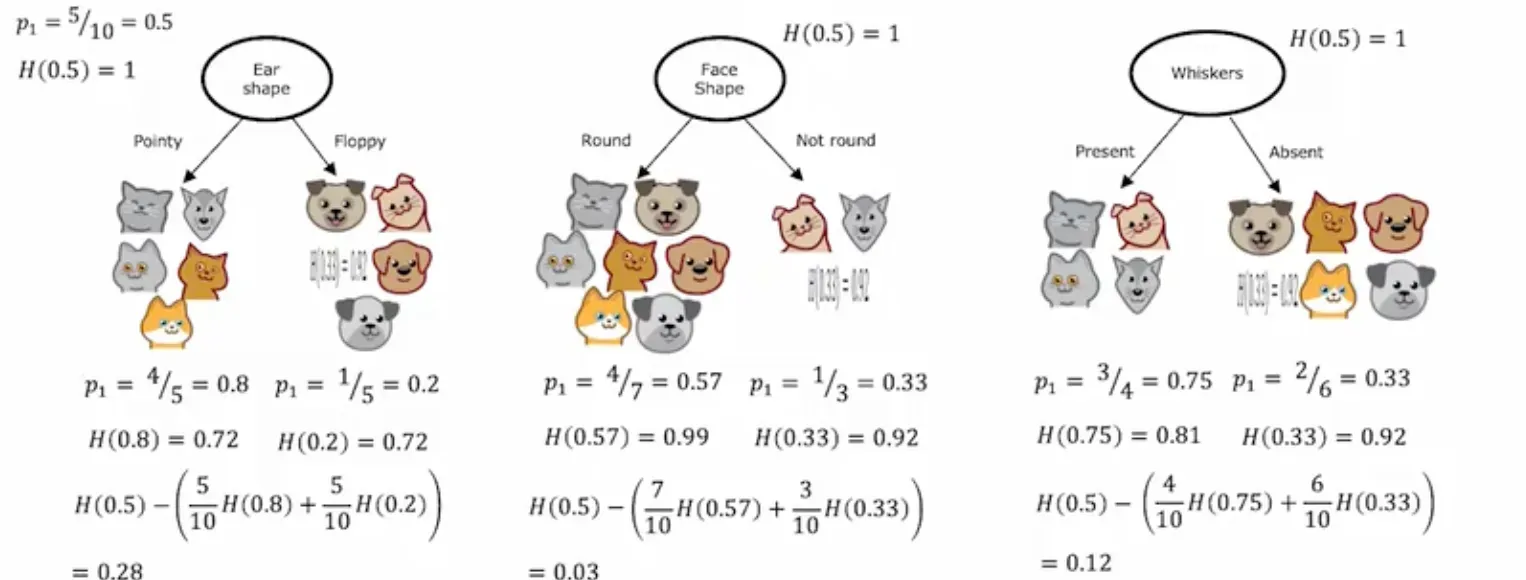

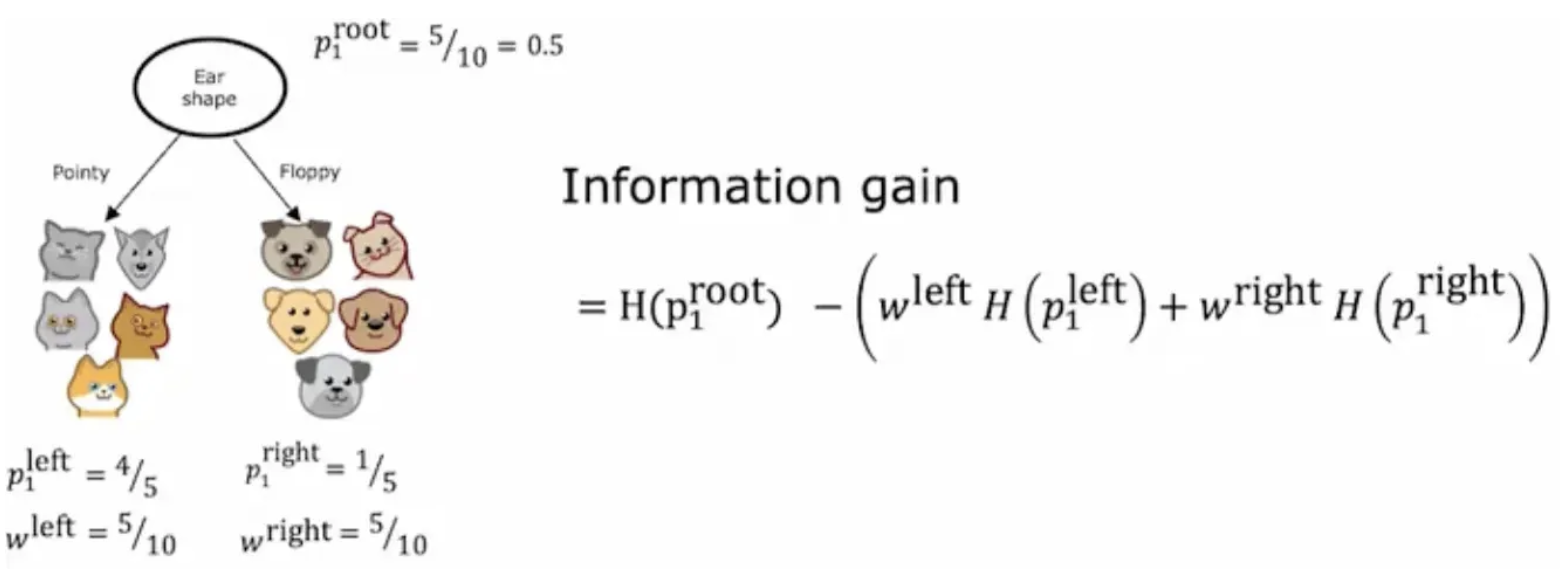

Entropy and information gain

Measuring purity
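A common way to measure purity is the binary entropy of a node. The formula below is a sketch under the assumption of two classes; the symbol $p_1$ (the fraction of positive examples in the node) is notation introduced here for illustration and does not appear in the notes.

$$
H(p_1) = -p_1 \log_2 p_1 - (1 - p_1)\log_2(1 - p_1), \qquad 0 \log_2 0 := 0
$$

Under this definition, a pure node ($p_1 = 0$ or $p_1 = 1$) has entropy 0, and an evenly mixed node ($p_1 = 0.5$) has entropy 1.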

Choose the split feature with the higher information gain
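To make the comparison concrete, here is a minimal sketch that computes the information gain of a candidate split as the parent's entropy minus the size-weighted entropy of the two children. It assumes binary 0/1 labels; the function names `entropy` and `information_gain` and the boolean `mask` argument are illustrative choices, not taken from the notes.

```python
import math


def entropy(p1: float) -> float:
    """Binary entropy (in bits) of a node whose positive fraction is p1."""
    if p1 == 0.0 or p1 == 1.0:
        return 0.0  # a pure node has zero entropy
    return -p1 * math.log2(p1) - (1 - p1) * math.log2(1 - p1)


def information_gain(labels, mask):
    """Entropy reduction from splitting 0/1 `labels` by a boolean `mask`.

    Examples where mask is True go to the left branch, the rest go right.
    """
    left = [y for y, m in zip(labels, mask) if m]
    right = [y for y, m in zip(labels, mask) if not m]
    if not left or not right:
        return 0.0  # the split sends everything to one side, so nothing is gained

    def positive_fraction(ys):
        return sum(ys) / len(ys)

    w_left = len(left) / len(labels)
    w_right = len(right) / len(labels)
    children = w_left * entropy(positive_fraction(left)) \
        + w_right * entropy(positive_fraction(right))
    return entropy(positive_fraction(labels)) - children


labels = [1, 1, 0, 0]
gain_a = information_gain(labels, [True, True, False, False])   # separates the classes
gain_b = information_gain(labels, [True, False, True, False])   # leaves both sides mixed
```

In this toy example the first split has the larger gain, so it would be the one selected.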

Decision tree training (recursive)

- Start with all examples at the root node

- Calculate information gain for all possible features, and pick the one with the highest information gain

- Split dataset according to selected feature, and create left and right branches of the tree

- Keep repeating the splitting process until a stopping criterion is met:

  - When a node is 100% one class

  - When splitting a node would cause the tree to exceed the maximum depth

  - When the information gain from additional splits is less than a threshold

  - When the number of examples in a node is below a threshold
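The recursive procedure above can be sketched roughly as follows. This is an illustration under stated assumptions, not a reference implementation: features are assumed to be binary (0/1), the tree is stored as nested dictionaries, and the names `build_tree`, `predict`, `max_depth`, `min_gain`, and `min_samples` are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy (in bits) of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(X, y, feature):
    """Entropy reduction from splitting on a binary (0/1) feature."""
    left = [label for row, label in zip(X, y) if row[feature] == 1]
    right = [label for row, label in zip(X, y) if row[feature] == 0]
    if not left or not right:
        return 0.0
    w = len(left) / len(y)
    return entropy(y) - (w * entropy(left) + (1 - w) * entropy(right))


def build_tree(X, y, depth=0, max_depth=3, min_gain=1e-6, min_samples=2):
    """Recursively grow a tree until one of the stopping criteria is met."""
    majority = Counter(y).most_common(1)[0][0]
    # Stop: node is pure, depth limit reached, or too few examples.
    if len(set(y)) == 1 or depth >= max_depth or len(y) < min_samples:
        return {"leaf": majority}
    # Pick the feature with the highest information gain.
    gains = [information_gain(X, y, f) for f in range(len(X[0]))]
    best = max(range(len(gains)), key=gains.__getitem__)
    # Stop: the best available split gains less than the threshold.
    if gains[best] < min_gain:
        return {"leaf": majority}
    left_idx = [i for i, row in enumerate(X) if row[best] == 1]
    right_idx = [i for i, row in enumerate(X) if row[best] == 0]

    def subtree(idx):
        return build_tree([X[i] for i in idx], [y[i] for i in idx],
                          depth + 1, max_depth, min_gain, min_samples)

    return {"feature": best, "left": subtree(left_idx), "right": subtree(right_idx)}


def predict(tree, row):
    """Follow branches from the root until a leaf is reached."""
    while "leaf" not in tree:
        tree = tree["left"] if row[tree["feature"]] == 1 else tree["right"]
    return tree["leaf"]


# Toy data: feature 0 determines the label, feature 1 is noise.
X = [[1, 1], [1, 0], [0, 1], [0, 0]]
y = [1, 1, 0, 0]
tree = build_tree(X, y)
```

On this toy data the root splits on feature 0 and both children are pure, so recursion stops after one level.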